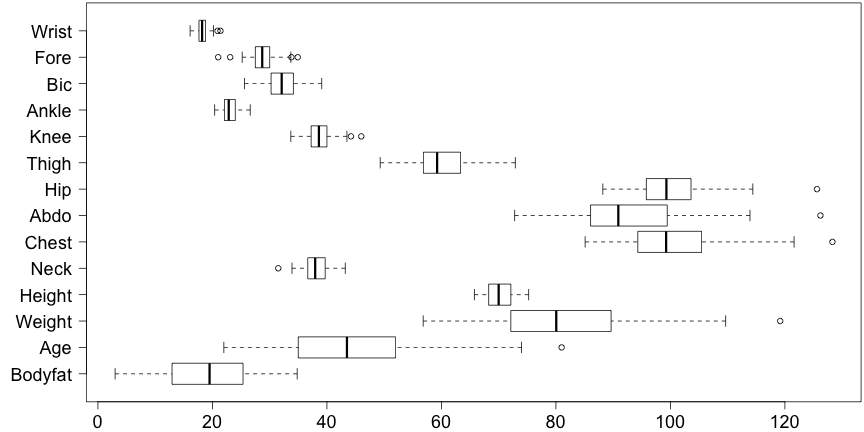

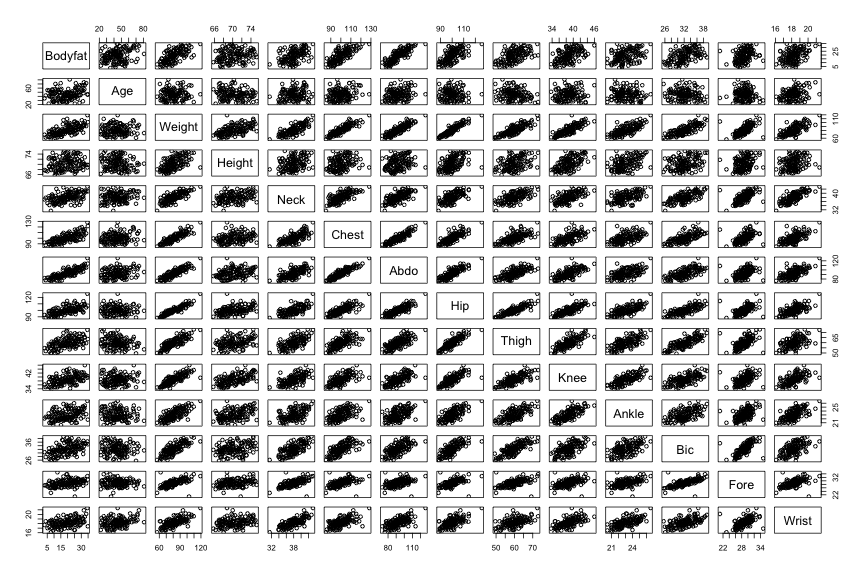

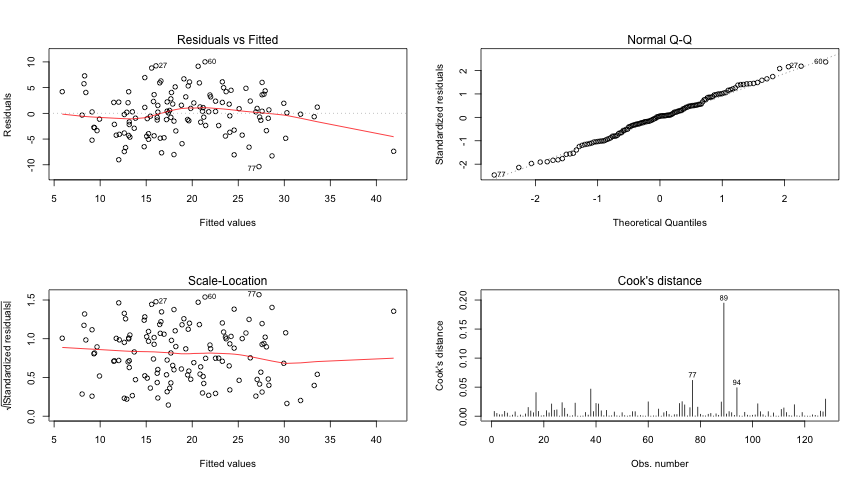

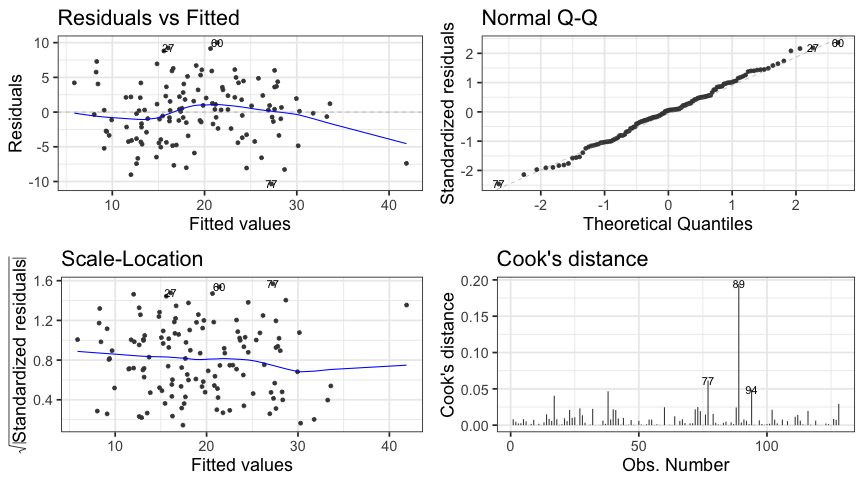

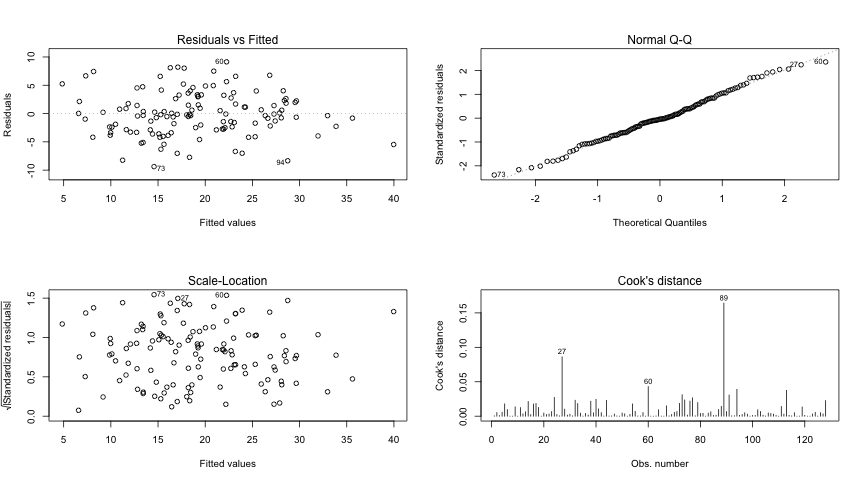

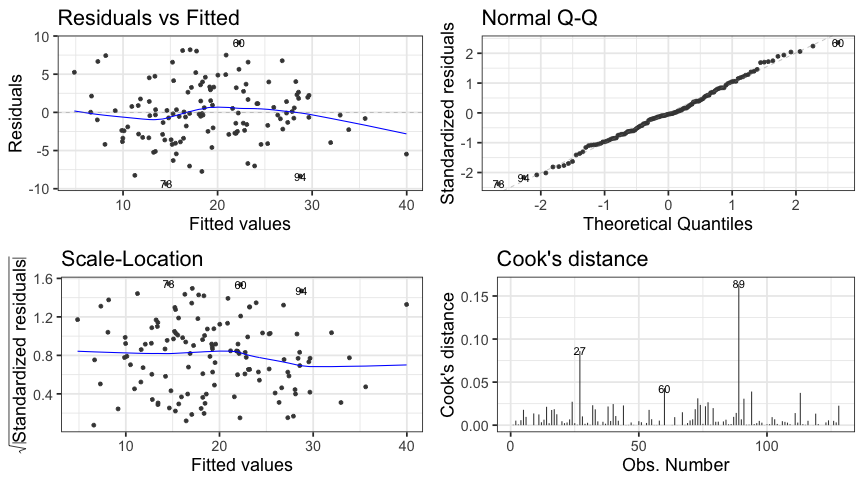

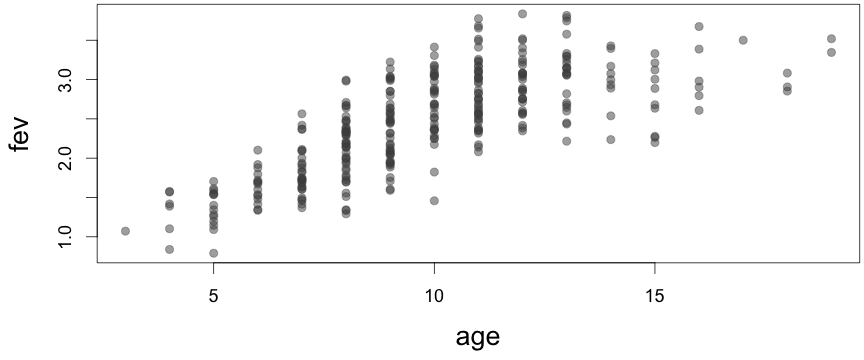

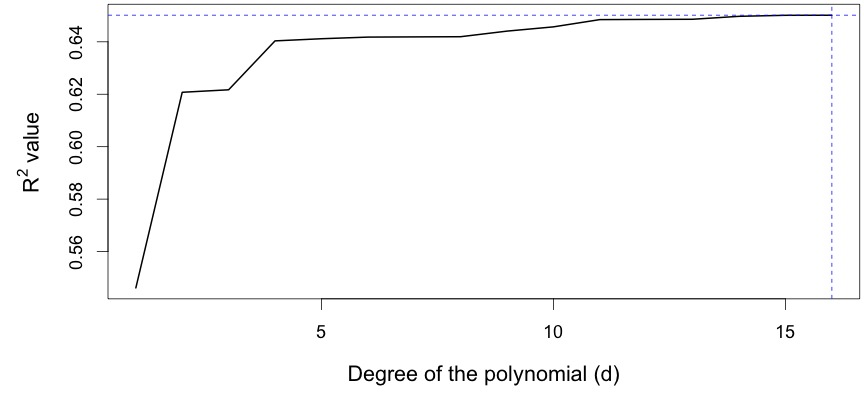

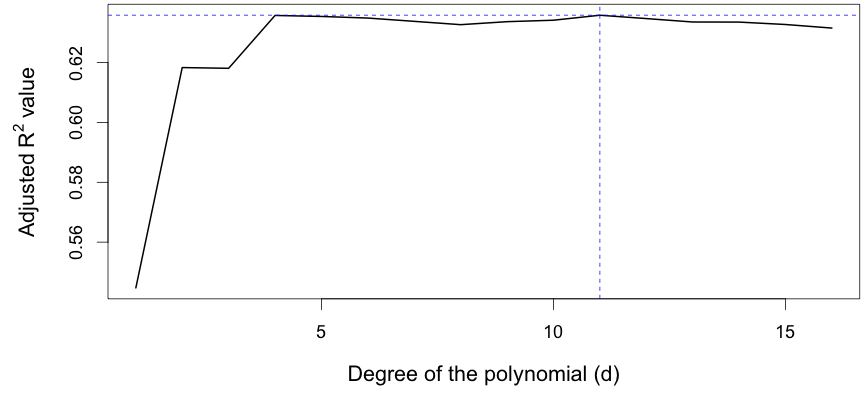

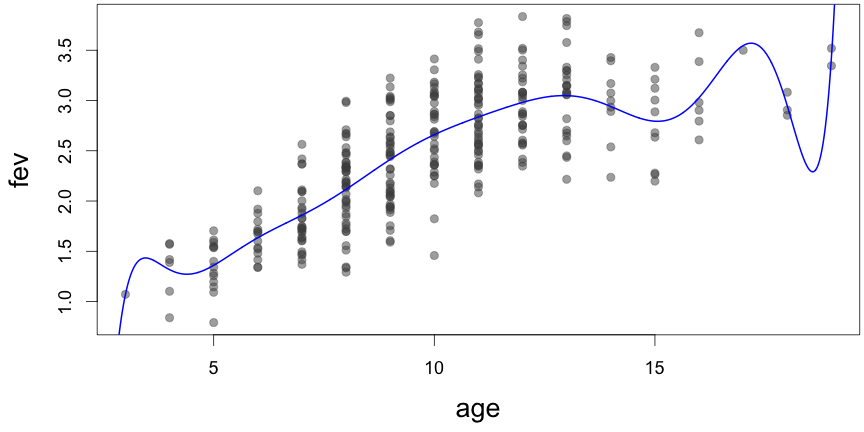

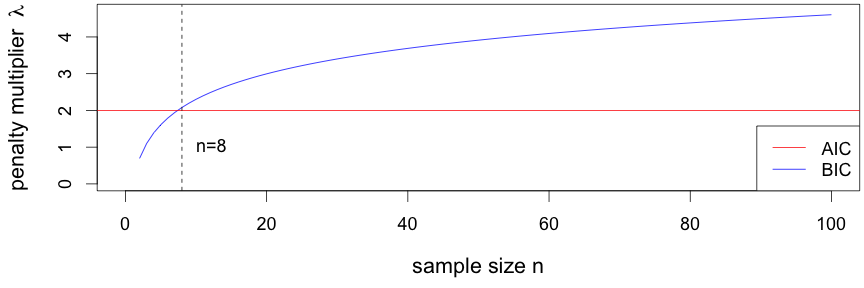

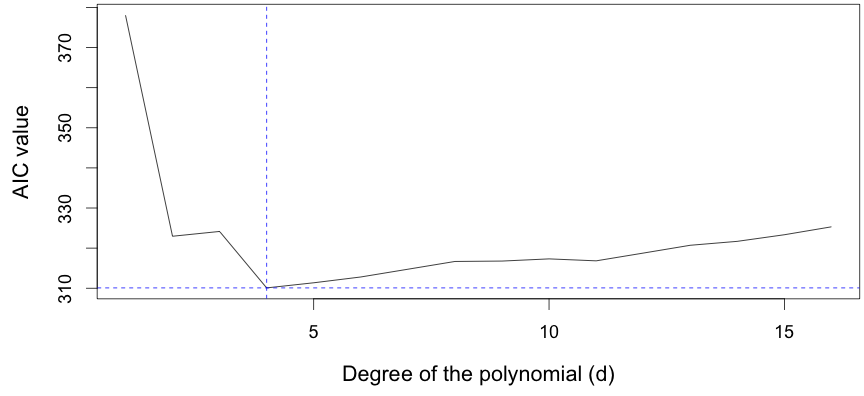

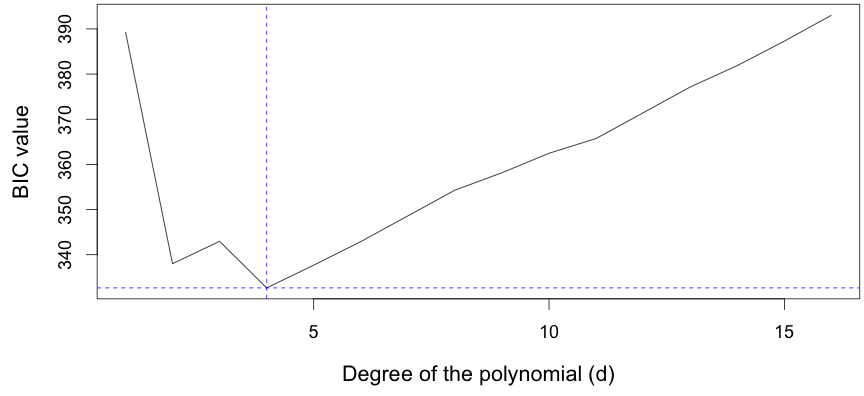

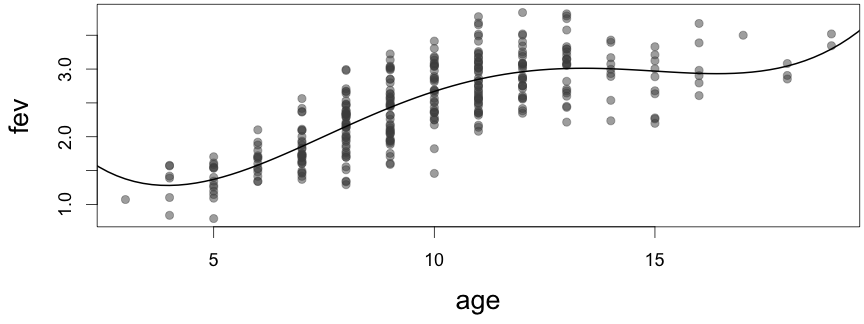

class: center, middle, inverse, title-slide # Lecture 1 ## Introduction to model selection ### Samuel Müller and Garth Tarr --- .blockquote[ ### "Doubt is not a pleasant mental state, but certainty is a ridiculous one." .right[— <cite>Voltaire (1694-1778)</cite>] ] .pull-left[ - .red[Necessity] to select .red.bold[models] is great despite philosophical issues - Myriad of model/variable selection methods based on .blue[loglikelihood], .red[RSS], prediction errors, .orange[resampling], etc ] .pull-right[ <!-- <img src="imgs/blackbox.jpg" width=700 /> --> <img src="imgs/blackbox.jpg" width="1344" /> ] --- ## Some motivating questions - How to .bold[.red[practically select model(s)]]? - How to cope with an ever .bold[.red[increasing number of observed variables]]? - How to .bold[.red[visualise]] the model building process? - How to assess the .bold[.red[stability]] of a selected model? ### Further aspects * Simultaneous estimation-selection or severing this (un)desirable link? * How to deal with outliers, influential points, missing values, multicollinearity, etc? --- ## Overview 1. .blue[Statistical models] - Assumptions - Possible models, model space - What makes a good model 2. .red["Classical"] model selection .red[criteria] - Residual sum of squares - `\(R^2\)` and adjusted `\(R^2\)` - Information criteria (e.g. AIC, BIC) 3. .blue[Stepwise search schemes] - Sequential testing - Backward stepwise methods --- class: segue # Statistical models --- class: eg ## Body fat data - `\(N=252\)` measurements of men (available in R package `mfp`) - .red[Training sample] size `\(n=128\)` (available in R package `mplot`) - .red[Response variable]: body fat percentage: `Bodyfat` - 13 or 14 .red[explanatory variables] ```r data("bodyfat", package = "mplot") dim(bodyfat) ``` ``` ## [1] 128 15 ``` ```r names(bodyfat) ``` ``` ## [1] "Id" "Bodyfat" "Age" "Weight" "Height" "Neck" ## [7] "Chest" "Abdo" "Hip" "Thigh" "Knee" "Ankle" ## [13] "Bic" "Fore" "Wrist" ``` .footnote[Source: Johnson (1996)] --- class: eg ## Body fat: Boxplots ```r bfat = bodyfat[, -1] # remove the ID column boxplot(bfat, horizontal = TRUE, las = 1) ``` <!-- --> --- class: eg <!-- # Body fat: Scatterplot matrix --> ```r pairs(bfat) ``` <!-- --> --- class: eg code80 ## Body fat: Correlation matrix ```r round(cor(bfat), 1) ``` ``` ## Bodyfat Age Weight Height Neck Chest Abdo Hip Thigh Knee Ankle Bic Fore Wrist ## Bodyfat 1.0 0.3 0.7 0.2 0.5 0.7 0.8 0.6 0.5 0.5 0.4 0.5 0.3 0.4 ## Age 0.3 1.0 0.0 -0.1 0.2 0.2 0.2 0.0 -0.2 0.1 -0.1 -0.1 -0.1 0.3 ## Weight 0.7 0.0 1.0 0.6 0.8 0.9 0.9 0.9 0.8 0.8 0.7 0.8 0.6 0.7 ## Height 0.2 -0.1 0.6 1.0 0.3 0.3 0.3 0.5 0.4 0.6 0.4 0.3 0.3 0.3 ## Neck 0.5 0.2 0.8 0.3 1.0 0.7 0.7 0.7 0.6 0.6 0.5 0.7 0.6 0.8 ## Chest 0.7 0.2 0.9 0.3 0.7 1.0 0.9 0.8 0.7 0.7 0.6 0.7 0.5 0.6 ## Abdo 0.8 0.2 0.9 0.3 0.7 0.9 1.0 0.8 0.7 0.7 0.6 0.7 0.5 0.6 ## Hip 0.6 0.0 0.9 0.5 0.7 0.8 0.8 1.0 0.9 0.8 0.7 0.8 0.5 0.6 ## Thigh 0.5 -0.2 0.8 0.4 0.6 0.7 0.7 0.9 1.0 0.7 0.7 0.8 0.5 0.5 ## Knee 0.5 0.1 0.8 0.6 0.6 0.7 0.7 0.8 0.7 1.0 0.7 0.6 0.5 0.6 ## Ankle 0.4 -0.1 0.7 0.4 0.5 0.6 0.6 0.7 0.7 0.7 1.0 0.6 0.5 0.6 ## Bic 0.5 -0.1 0.8 0.3 0.7 0.7 0.7 0.8 0.8 0.6 0.6 1.0 0.7 0.6 ## Fore 0.3 -0.1 0.6 0.3 0.6 0.5 0.5 0.5 0.5 0.5 0.5 0.7 1.0 0.5 ## Wrist 0.4 0.3 0.7 0.3 0.8 0.6 0.6 0.6 0.5 0.6 0.6 0.6 0.5 1.0 ``` --- class: eg ```r col = colorRampPalette(c("red", "white", "steelblue"))(20) heatmaply::heatmaply(cor(bfat), limits = c(-1, 1), colors = col, margins = c(70, 70), Rowv = NULL, Colv = NULL) ``` ``` ## Warning in config(l, displaylogo = FALSE, collaborate = FALSE, modeBarButtonsToRemove = ## c("sendDataToCloud", : The collaborate button is no longer supported ``` ``` ## Warning: 'config' objects don't have these attributes: 'collaborate' ## Valid attributes include: ## 'staticPlot', 'plotlyServerURL', 'editable', 'edits', 'autosizable', 'responsive', 'fillFrame', 'frameMargins', 'scrollZoom', 'doubleClick', 'showAxisDragHandles', 'showAxisRangeEntryBoxes', 'showTips', 'showLink', 'linkText', 'sendData', 'showSources', 'displayModeBar', 'showSendToCloud', 'modeBarButtonsToRemove', 'modeBarButtonsToAdd', 'modeBarButtons', 'toImageButtonOptions', 'displaylogo', 'watermark', 'plotGlPixelRatio', 'setBackground', 'topojsonURL', 'mapboxAccessToken', 'logging', 'queueLength', 'globalTransforms', 'locale', 'locales' ``` <div id="htmlwidget-5233f25bc3a082057104" style="width:792px;height:504px;" class="plotly html-widget"></div> <script type="application/json" data-for="htmlwidget-5233f25bc3a082057104">{"x":{"data":[{"x":[1,2,3,4,5,6,7,8,9,10,11,12,13,14],"y":[1,2,3,4,5,6,7,8,9,10,11,12,13,14],"z":[[0.520488217443768,0.42519287896855,0.755805391839332,0.398404375078238,0.800360094887168,0.704729427675962,0.6895102787714,0.703350503083516,0.621909177467968,0.67782818022503,0.699582145268417,0.657895559245597,0.611289707125732,1],[0.454579778056724,0.130723248326702,0.683310961942725,0.417840904995398,0.688199017689849,0.610355432862422,0.548039573381677,0.603565924700976,0.620785493779628,0.566276477363734,0.599285566355194,0.734008100124431,1,0.611289707125732],[0.579544624668937,0.112832582005641,0.820094185464628,0.42117444681385,0.739625596142456,0.750228193875699,0.719804420733728,0.795012548437261,0.824773675839879,0.695066196556442,0.629022111005089,1,0.734008100124431,0.657895559245597],[0.470412164127523,0.12066293259172,0.778477996057023,0.535911267887929,0.625298168766987,0.628456754073621,0.630560718677412,0.751689763617125,0.73865529978234,0.784100317329133,1,0.629022111005089,0.599285566355194,0.699582145268417],[0.576316172777821,0.249148752304993,0.853186908355778,0.64054816852424,0.679036217274322,0.71683680136541,0.741368631444644,0.813749249903182,0.786945139929237,1,0.784100317329133,0.695066196556442,0.566276477363734,0.67782818022503],[0.605220298099796,0,0.864103314565272,0.47356200983191,0.699792159307258,0.734904091770663,0.765126438523911,0.893954895067427,1,0.786945139929237,0.73865529978234,0.824773675839879,0.620785493779628,0.621909177467968],[0.691857217045358,0.141637775954217,0.944591390591219,0.5529316227882,0.733984914054102,0.836051917376652,0.872672680955329,1,0.893954895067427,0.813749249903182,0.751689763617125,0.795012548437261,0.603565924700976,0.703350503083516],[0.86625659444637,0.374464501543047,0.905059998966015,0.409206675529042,0.744534928541108,0.925483362341079,1,0.872672680955329,0.765126438523911,0.741368631444644,0.630560718677412,0.719804420733728,0.548039573381677,0.6895102787714],[0.783308162891277,0.352258039639758,0.910443027121432,0.39954675195937,0.771230868679397,1,0.925483362341079,0.836051917376652,0.734904091770663,0.71683680136541,0.628456754073621,0.750228193875699,0.610355432862422,0.704729427675962],[0.557441596310206,0.306614031237599,0.815221227580148,0.44496186614524,1,0.771230868679397,0.744534928541108,0.733984914054102,0.699792159307258,0.679036217274322,0.625298168766987,0.739625596142456,0.688199017689849,0.800360094887168],[0.298295139519881,0.052672496985855,0.633444457725067,1,0.44496186614524,0.39954675195937,0.409206675529042,0.5529316227882,0.47356200983191,0.64054816852424,0.535911267887929,0.42117444681385,0.417840904995398,0.398404375078238],[0.720124201471456,0.203108287510713,1,0.633444457725067,0.815221227580148,0.910443027121432,0.905059998966015,0.944591390591219,0.864103314565272,0.853186908355778,0.778477996057023,0.820094185464628,0.683310961942725,0.755805391839332],[0.383462054117047,1,0.203108287510713,0.052672496985855,0.306614031237599,0.352258039639758,0.374464501543047,0.141637775954217,0,0.249148752304993,0.12066293259172,0.112832582005641,0.130723248326702,0.42519287896855],[1,0.383462054117047,0.720124201471456,0.298295139519881,0.557441596310206,0.783308162891277,0.86625659444637,0.691857217045358,0.605220298099796,0.576316172777821,0.470412164127523,0.579544624668937,0.454579778056724,0.520488217443768]],"text":[["row: Wrist<br>column: Bodyfat<br>value: 0.42009","row: Wrist<br>column: Age<br>value: 0.30484","row: Wrist<br>column: Weight<br>value: 0.70468","row: Wrist<br>column: Height<br>value: 0.27245","row: Wrist<br>column: Neck<br>value: 0.75856","row: Wrist<br>column: Chest<br>value: 0.64291","row: Wrist<br>column: Abdo<br>value: 0.62450","row: Wrist<br>column: Hip<br>value: 0.64124","row: Wrist<br>column: Thigh<br>value: 0.54275","row: Wrist<br>column: Knee<br>value: 0.61037","row: Wrist<br>column: Ankle<br>value: 0.63668","row: Wrist<br>column: Bic<br>value: 0.58627","row: Wrist<br>column: Fore<br>value: 0.52990","row: Wrist<br>column: Wrist<br>value: 1.00000"],["row: Fore<br>column: Bodyfat<br>value: 0.34038","row: Fore<br>column: Age<br>value: -0.05128","row: Fore<br>column: Weight<br>value: 0.61700","row: Fore<br>column: Height<br>value: 0.29595","row: Fore<br>column: Neck<br>value: 0.62292","row: Fore<br>column: Chest<br>value: 0.52877","row: Fore<br>column: Abdo<br>value: 0.45341","row: Fore<br>column: Hip<br>value: 0.52056","row: Fore<br>column: Thigh<br>value: 0.54139","row: Fore<br>column: Knee<br>value: 0.47547","row: Fore<br>column: Ankle<br>value: 0.51539","row: Fore<br>column: Bic<br>value: 0.67832","row: Fore<br>column: Fore<br>value: 1.00000","row: Fore<br>column: Wrist<br>value: 0.52990"],["row: Bic<br>column: Bodyfat<br>value: 0.49151","row: Bic<br>column: Age<br>value: -0.07292","row: Bic<br>column: Weight<br>value: 0.78243","row: Bic<br>column: Height<br>value: 0.29998","row: Bic<br>column: Neck<br>value: 0.68511","row: Bic<br>column: Chest<br>value: 0.69793","row: Bic<br>column: Abdo<br>value: 0.66114","row: Bic<br>column: Hip<br>value: 0.75209","row: Bic<br>column: Thigh<br>value: 0.78809","row: Bic<br>column: Knee<br>value: 0.63122","row: Bic<br>column: Ankle<br>value: 0.55135","row: Bic<br>column: Bic<br>value: 1.00000","row: Bic<br>column: Fore<br>value: 0.67832","row: Bic<br>column: Wrist<br>value: 0.58627"],["row: Ankle<br>column: Bodyfat<br>value: 0.35953","row: Ankle<br>column: Age<br>value: -0.06345","row: Ankle<br>column: Weight<br>value: 0.73210","row: Ankle<br>column: Height<br>value: 0.43874","row: Ankle<br>column: Neck<br>value: 0.54685","row: Ankle<br>column: Chest<br>value: 0.55067","row: Ankle<br>column: Abdo<br>value: 0.55321","row: Ankle<br>column: Hip<br>value: 0.69970","row: Ankle<br>column: Thigh<br>value: 0.68394","row: Ankle<br>column: Knee<br>value: 0.73890","row: Ankle<br>column: Ankle<br>value: 1.00000","row: Ankle<br>column: Bic<br>value: 0.55135","row: Ankle<br>column: Fore<br>value: 0.51539","row: Ankle<br>column: Wrist<br>value: 0.63668"],["row: Knee<br>column: Bodyfat<br>value: 0.48761","row: Knee<br>column: Age<br>value: 0.09194","row: Knee<br>column: Weight<br>value: 0.82245","row: Knee<br>column: Height<br>value: 0.56529","row: Knee<br>column: Neck<br>value: 0.61183","row: Knee<br>column: Chest<br>value: 0.65755","row: Knee<br>column: Abdo<br>value: 0.68722","row: Knee<br>column: Hip<br>value: 0.77475","row: Knee<br>column: Thigh<br>value: 0.74234","row: Knee<br>column: Knee<br>value: 1.00000","row: Knee<br>column: Ankle<br>value: 0.73890","row: Knee<br>column: Bic<br>value: 0.63122","row: Knee<br>column: Fore<br>value: 0.47547","row: Knee<br>column: Wrist<br>value: 0.61037"],["row: Thigh<br>column: Bodyfat<br>value: 0.52256","row: Thigh<br>column: Age<br>value: -0.20937","row: Thigh<br>column: Weight<br>value: 0.83565","row: Thigh<br>column: Height<br>value: 0.36334","row: Thigh<br>column: Neck<br>value: 0.63694","row: Thigh<br>column: Chest<br>value: 0.67940","row: Thigh<br>column: Abdo<br>value: 0.71595","row: Thigh<br>column: Hip<br>value: 0.87175","row: Thigh<br>column: Thigh<br>value: 1.00000","row: Thigh<br>column: Knee<br>value: 0.74234","row: Thigh<br>column: Ankle<br>value: 0.68394","row: Thigh<br>column: Bic<br>value: 0.78809","row: Thigh<br>column: Fore<br>value: 0.54139","row: Thigh<br>column: Wrist<br>value: 0.54275"],["row: Hip<br>column: Bodyfat<br>value: 0.62734","row: Hip<br>column: Age<br>value: -0.03808","row: Hip<br>column: Weight<br>value: 0.93299","row: Hip<br>column: Height<br>value: 0.45933","row: Hip<br>column: Neck<br>value: 0.67829","row: Hip<br>column: Chest<br>value: 0.80173","row: Hip<br>column: Abdo<br>value: 0.84601","row: Hip<br>column: Hip<br>value: 1.00000","row: Hip<br>column: Thigh<br>value: 0.87175","row: Hip<br>column: Knee<br>value: 0.77475","row: Hip<br>column: Ankle<br>value: 0.69970","row: Hip<br>column: Bic<br>value: 0.75209","row: Hip<br>column: Fore<br>value: 0.52056","row: Hip<br>column: Wrist<br>value: 0.64124"],["row: Abdo<br>column: Bodyfat<br>value: 0.83825","row: Abdo<br>column: Age<br>value: 0.24349","row: Abdo<br>column: Weight<br>value: 0.88518","row: Abdo<br>column: Height<br>value: 0.28551","row: Abdo<br>column: Neck<br>value: 0.69105","row: Abdo<br>column: Chest<br>value: 0.90988","row: Abdo<br>column: Abdo<br>value: 1.00000","row: Abdo<br>column: Hip<br>value: 0.84601","row: Abdo<br>column: Thigh<br>value: 0.71595","row: Abdo<br>column: Knee<br>value: 0.68722","row: Abdo<br>column: Ankle<br>value: 0.55321","row: Abdo<br>column: Bic<br>value: 0.66114","row: Abdo<br>column: Fore<br>value: 0.45341","row: Abdo<br>column: Wrist<br>value: 0.62450"],["row: Chest<br>column: Bodyfat<br>value: 0.73794","row: Chest<br>column: Age<br>value: 0.21664","row: Chest<br>column: Weight<br>value: 0.89169","row: Chest<br>column: Height<br>value: 0.27383","row: Chest<br>column: Neck<br>value: 0.72333","row: Chest<br>column: Chest<br>value: 1.00000","row: Chest<br>column: Abdo<br>value: 0.90988","row: Chest<br>column: Hip<br>value: 0.80173","row: Chest<br>column: Thigh<br>value: 0.67940","row: Chest<br>column: Knee<br>value: 0.65755","row: Chest<br>column: Ankle<br>value: 0.55067","row: Chest<br>column: Bic<br>value: 0.69793","row: Chest<br>column: Fore<br>value: 0.52877","row: Chest<br>column: Wrist<br>value: 0.64291"],["row: Neck<br>column: Bodyfat<br>value: 0.46478","row: Neck<br>column: Age<br>value: 0.16144","row: Neck<br>column: Weight<br>value: 0.77653","row: Neck<br>column: Height<br>value: 0.32875","row: Neck<br>column: Neck<br>value: 1.00000","row: Neck<br>column: Chest<br>value: 0.72333","row: Neck<br>column: Abdo<br>value: 0.69105","row: Neck<br>column: Hip<br>value: 0.67829","row: Neck<br>column: Thigh<br>value: 0.63694","row: Neck<br>column: Knee<br>value: 0.61183","row: Neck<br>column: Ankle<br>value: 0.54685","row: Neck<br>column: Bic<br>value: 0.68511","row: Neck<br>column: Fore<br>value: 0.62292","row: Neck<br>column: Wrist<br>value: 0.75856"],["row: Height<br>column: Bodyfat<br>value: 0.15138","row: Height<br>column: Age<br>value: -0.14567","row: Height<br>column: Weight<br>value: 0.55670","row: Height<br>column: Height<br>value: 1.00000","row: Height<br>column: Neck<br>value: 0.32875","row: Height<br>column: Chest<br>value: 0.27383","row: Height<br>column: Abdo<br>value: 0.28551","row: Height<br>column: Hip<br>value: 0.45933","row: Height<br>column: Thigh<br>value: 0.36334","row: Height<br>column: Knee<br>value: 0.56529","row: Height<br>column: Ankle<br>value: 0.43874","row: Height<br>column: Bic<br>value: 0.29998","row: Height<br>column: Fore<br>value: 0.29595","row: Height<br>column: Wrist<br>value: 0.27245"],["row: Weight<br>column: Bodyfat<br>value: 0.66153","row: Weight<br>column: Age<br>value: 0.03626","row: Weight<br>column: Weight<br>value: 1.00000","row: Weight<br>column: Height<br>value: 0.55670","row: Weight<br>column: Neck<br>value: 0.77653","row: Weight<br>column: Chest<br>value: 0.89169","row: Weight<br>column: Abdo<br>value: 0.88518","row: Weight<br>column: Hip<br>value: 0.93299","row: Weight<br>column: Thigh<br>value: 0.83565","row: Weight<br>column: Knee<br>value: 0.82245","row: Weight<br>column: Ankle<br>value: 0.73210","row: Weight<br>column: Bic<br>value: 0.78243","row: Weight<br>column: Fore<br>value: 0.61700","row: Weight<br>column: Wrist<br>value: 0.70468"],["row: Age<br>column: Bodyfat<br>value: 0.25437","row: Age<br>column: Age<br>value: 1.00000","row: Age<br>column: Weight<br>value: 0.03626","row: Age<br>column: Height<br>value: -0.14567","row: Age<br>column: Neck<br>value: 0.16144","row: Age<br>column: Chest<br>value: 0.21664","row: Age<br>column: Abdo<br>value: 0.24349","row: Age<br>column: Hip<br>value: -0.03808","row: Age<br>column: Thigh<br>value: -0.20937","row: Age<br>column: Knee<br>value: 0.09194","row: Age<br>column: Ankle<br>value: -0.06345","row: Age<br>column: Bic<br>value: -0.07292","row: Age<br>column: Fore<br>value: -0.05128","row: Age<br>column: Wrist<br>value: 0.30484"],["row: Bodyfat<br>column: Bodyfat<br>value: 1.00000","row: Bodyfat<br>column: Age<br>value: 0.25437","row: Bodyfat<br>column: Weight<br>value: 0.66153","row: Bodyfat<br>column: Height<br>value: 0.15138","row: Bodyfat<br>column: Neck<br>value: 0.46478","row: Bodyfat<br>column: Chest<br>value: 0.73794","row: Bodyfat<br>column: Abdo<br>value: 0.83825","row: Bodyfat<br>column: Hip<br>value: 0.62734","row: Bodyfat<br>column: Thigh<br>value: 0.52256","row: Bodyfat<br>column: Knee<br>value: 0.48761","row: Bodyfat<br>column: Ankle<br>value: 0.35953","row: Bodyfat<br>column: Bic<br>value: 0.49151","row: Bodyfat<br>column: Fore<br>value: 0.34038","row: Bodyfat<br>column: Wrist<br>value: 0.42009"]],"colorscale":[[0,"#FFC9C9"],[0.052672496985855,"#FFD9D9"],[0.112832582005641,"#FFECEC"],[0.12066293259172,"#FFEEEE"],[0.130723248326702,"#FFF1F1"],[0.141637775954217,"#FEF2F2"],[0.203108287510713,"#F7F7F9"],[0.249148752304993,"#EEF3F8"],[0.298295139519881,"#E2ECF3"],[0.306614031237599,"#E0EBF3"],[0.352258039639758,"#D6E4EF"],[0.374464501543047,"#D2E0EC"],[0.383462054117047,"#D0DFEC"],[0.398404375078238,"#CCDDEA"],[0.39954675195937,"#CCDDEA"],[0.409206675529042,"#CADBE9"],[0.417840904995398,"#C8DAE9"],[0.42117444681385,"#C7D9E8"],[0.42519287896855,"#C6D8E8"],[0.44496186614524,"#C2D5E6"],[0.454579778056724,"#BFD4E5"],[0.470412164127523,"#BCD1E4"],[0.47356200983191,"#BBD1E3"],[0.520488217443768,"#B1CADF"],[0.535911267887929,"#ADC7DE"],[0.548039573381677,"#ABC5DD"],[0.5529316227882,"#AAC5DC"],[0.557441596310206,"#A9C4DC"],[0.566276477363734,"#A7C3DB"],[0.576316172777821,"#A4C1DA"],[0.579544624668937,"#A4C1DA"],[0.599285566355194,"#9FBED8"],[0.603565924700976,"#9EBDD7"],[0.605220298099796,"#9EBDD7"],[0.610355432862422,"#9DBCD7"],[0.611289707125732,"#9CBCD7"],[0.620785493779628,"#9ABBD6"],[0.621909177467968,"#9ABAD6"],[0.625298168766987,"#99BAD5"],[0.628456754073621,"#98B9D5"],[0.629022111005089,"#98B9D5"],[0.630560718677412,"#98B9D5"],[0.633444457725067,"#97B9D5"],[0.64054816852424,"#96B8D4"],[0.657895559245597,"#92B5D2"],[0.67782818022503,"#8DB2D1"],[0.679036217274322,"#8DB2D0"],[0.683310961942725,"#8CB1D0"],[0.688199017689849,"#8BB1D0"],[0.6895102787714,"#8BB0D0"],[0.691857217045358,"#8AB0CF"],[0.695066196556442,"#8AB0CF"],[0.699582145268417,"#89AFCF"],[0.699792159307258,"#89AFCF"],[0.703350503083516,"#88AECE"],[0.704729427675962,"#88AECE"],[0.71683680136541,"#85ACCD"],[0.719804420733728,"#84ACCD"],[0.720124201471456,"#84ACCD"],[0.733984914054102,"#81AACB"],[0.734008100124431,"#81AACB"],[0.734904091770663,"#81AACB"],[0.73865529978234,"#80A9CB"],[0.739625596142456,"#80A9CB"],[0.741368631444644,"#7FA9CB"],[0.744534928541108,"#7FA8CA"],[0.750228193875699,"#7DA7CA"],[0.751689763617125,"#7DA7CA"],[0.755805391839332,"#7CA6C9"],[0.765126438523911,"#7AA5C9"],[0.771230868679397,"#79A4C8"],[0.778477996057023,"#77A3C7"],[0.783308162891277,"#76A2C7"],[0.784100317329133,"#76A2C7"],[0.786945139929237,"#75A2C7"],[0.795012548437261,"#73A1C6"],[0.800360094887168,"#72A0C5"],[0.813749249903182,"#6F9EC4"],[0.815221227580148,"#6F9EC4"],[0.820094185464628,"#6D9DC4"],[0.824773675839879,"#6C9CC3"],[0.836051917376652,"#6A9AC2"],[0.853186908355778,"#6698C0"],[0.864103314565272,"#6496BF"],[0.86625659444637,"#6396BF"],[0.872672680955329,"#6295BF"],[0.893954895067427,"#5D92BD"],[0.905059998966015,"#5B90BC"],[0.910443027121432,"#5A8FBB"],[0.925483362341079,"#568DBA"],[0.944591390591219,"#528AB8"],[1,"#4682B4"]],"type":"heatmap","showscale":false,"autocolorscale":false,"showlegend":false,"xaxis":"x","yaxis":"y","hoverinfo":"text","frame":null},{"x":[1],"y":[1],"name":"99_5229fec3c2f67fe4abdfd5d3b3e5442a","type":"scatter","mode":"markers","opacity":0,"hoverinfo":"skip","showlegend":false,"marker":{"color":[0,1],"colorscale":[[0,"#FF0000"],[0.0526315789473684,"#FF1A1A"],[0.105263157894737,"#FF3535"],[0.157894736842105,"#FF5050"],[0.210526315789474,"#FF6B6B"],[0.263157894736842,"#FF8686"],[0.315789473684211,"#FFA1A1"],[0.368421052631579,"#FFBBBB"],[0.421052631578947,"#FFD6D6"],[0.473684210526316,"#FFF1F1"],[0.526315789473684,"#F5F8FB"],[0.578947368421053,"#E1EBF3"],[0.631578947368421,"#CEDEEB"],[0.684210526315789,"#BAD0E3"],[0.736842105263158,"#A7C3DB"],[0.789473684210526,"#93B6D3"],[0.842105263157895,"#80A9CB"],[0.894736842105263,"#6C9CC3"],[0.947368421052632,"#598FBB"],[1,"#4682B4"]],"colorbar":{"bgcolor":"rgba(255,255,255,1)","bordercolor":"transparent","borderwidth":1.88976377952756,"thickness":23.04,"title":null,"titlefont":{"color":"rgba(0,0,0,1)","family":"","size":14.6118721461187},"tickmode":"array","ticktext":["-1.0","-0.5","0.0","0.5","1.0"],"tickvals":[0,0.25,0.5,0.75,1],"tickfont":{"color":"rgba(0,0,0,1)","family":"","size":11.689497716895},"ticklen":2,"len":0.5}},"xaxis":"x","yaxis":"y","frame":null}],"layout":{"xaxis":{"domain":[0,1],"automargin":true,"type":"linear","autorange":false,"range":[0.5,14.5],"tickmode":"array","ticktext":["Bodyfat","Age","Weight","Height","Neck","Chest","Abdo","Hip","Thigh","Knee","Ankle","Bic","Fore","Wrist"],"tickvals":[1,2,3,4,5,6,7,8,9,10,11,12,13,14],"categoryorder":"array","categoryarray":["Bodyfat","Age","Weight","Height","Neck","Chest","Abdo","Hip","Thigh","Knee","Ankle","Bic","Fore","Wrist"],"nticks":null,"ticks":"outside","tickcolor":"rgba(51,51,51,1)","ticklen":3.65296803652968,"tickwidth":0.66417600664176,"showticklabels":true,"tickfont":{"color":"rgba(77,77,77,1)","family":"","size":13.2835201328352},"tickangle":-45,"showline":true,"linecolor":"rgba(0,0,0,1)","linewidth":0.66417600664176,"showgrid":false,"gridcolor":null,"gridwidth":0,"zeroline":false,"anchor":"y","title":"","hoverformat":".2f"},"yaxis":{"domain":[0,1],"automargin":true,"type":"linear","autorange":false,"range":[0.5,14.5],"tickmode":"array","ticktext":["Wrist","Fore","Bic","Ankle","Knee","Thigh","Hip","Abdo","Chest","Neck","Height","Weight","Age","Bodyfat"],"tickvals":[1,2,3,4,5,6,7,8,9,10,11,12,13,14],"categoryorder":"array","categoryarray":["Wrist","Fore","Bic","Ankle","Knee","Thigh","Hip","Abdo","Chest","Neck","Height","Weight","Age","Bodyfat"],"nticks":null,"ticks":"outside","tickcolor":"rgba(51,51,51,1)","ticklen":3.65296803652968,"tickwidth":0.66417600664176,"showticklabels":true,"tickfont":{"color":"rgba(77,77,77,1)","family":"","size":13.2835201328352},"tickangle":-0,"showline":true,"linecolor":"rgba(0,0,0,1)","linewidth":0.66417600664176,"showgrid":false,"gridcolor":null,"gridwidth":0,"zeroline":false,"anchor":"x","title":"","hoverformat":".2f"},"annotations":[],"shapes":[{"type":"rect","fillcolor":null,"line":{"color":null,"width":0,"linetype":[]},"yref":"paper","xref":"paper","x0":0,"x1":1,"y0":0,"y1":1}],"images":[],"margin":{"t":0,"r":null,"b":70,"l":70},"paper_bgcolor":"rgba(255,255,255,1)","font":{"color":"rgba(0,0,0,1)","family":"","size":14.6118721461187},"showlegend":true,"legend":{"bgcolor":"rgba(255,255,255,1)","bordercolor":"transparent","borderwidth":1.88976377952756,"font":{"color":"rgba(0,0,0,1)","family":"","size":11.689497716895},"y":1,"yanchor":"top"},"hovermode":"closest","barmode":"relative","title":""},"attrs":{"9ae17f8afca6":{"fill":{},"text":{},"x":{},"y":{},"type":"heatmap"}},"source":"A","config":{"doubleClick":"reset","showSendToCloud":false,"displaylogo":false,"collaborate":false,"modeBarButtonsToRemove":["sendDataToCloud","select2d","lasso2d","autoScale2d","hoverClosestCartesian","hoverCompareCartesian","sendDataToCloud"]},"highlight":{"on":"plotly_click","persistent":false,"dynamic":false,"selectize":false,"opacityDim":0.2,"selected":{"opacity":1},"debounce":0},"subplot":true,"shinyEvents":["plotly_hover","plotly_click","plotly_selected","plotly_relayout","plotly_brushed","plotly_brushing","plotly_clickannotation","plotly_doubleclick","plotly_deselect","plotly_afterplot"],"base_url":"https://plot.ly"},"evals":[],"jsHooks":[]}</script> ```r # library(d3heatmap) # d3heatmap(cor(bfat)) # d3heat = d3heatmap(cor(bfat)) # d3heat # htmlwidgets::saveWidget(d3heat, file = 'd3hm.html', selfcontained=TRUE) # cat('<iframe src="d3hm.html"> </iframe>') ``` --- ## Notation for linear regression models - .bold[.red[Data]]: `\(\mathbf{\color{red}{y}}=(y_1,\ldots,y_n)^\top\)` and an `\(n \times p\)` (design) matrix `\(\mathbf{\color{red}{X}}\)` - Index the columns of `\(\mathbf{X}\)` by `\(\{1,\ldots,p\} \Rightarrow \color{blue}{\alpha}\)` denotes any subset of `\(p_{\color{blue}{\alpha}}\)` distinct elements from `\(\{1,\ldots,p\}\)` - The .bold[.blue[linear regression model]] `\(\color{blue}{\alpha}\)` is `$$y_i = \mathbf{x}_{\color{blue}{\alpha} i}^\top \boldsymbol{\beta}_{\color{blue}{\alpha}} + \sigma_{\color{blue}{\alpha}}\epsilon_{\color{blue}{\alpha} i}, \,\,\,\, i = 1,\ldots, n$$` - `\(\mathbf{\beta}_{\color{blue}{\alpha}}\)` an unknown `\(p_{\color{blue}{\alpha}}\)`-vector of *regression parameters* - `\(\sigma_{\color{blue}{\alpha}}\)` an unknown *scale parameter* - `\(\mathbf{X}_{\color{blue}{\alpha}}\)` (design matrix of `\(\color{blue}{\alpha}\)`) and `\(\mathbf{\epsilon}_{\color{blue}{\alpha}} = (\epsilon_{\color{blue}{\alpha} 1},\ldots,\epsilon_{\color{blue}{\alpha} n})^\top\)` are independent, and the `\(\epsilon_{\color{blue}{\alpha} i}\)` are treated as having location zero and spread one --- ## Implicit assumptions - `\(\mathbf{y}\)` and `\(\mathbf{X}\)` are fully observed - in practice almost always violated! * `library(mice)` * `library(mi)` * `library(Amelia)` * etc - `\(p\)` and `\(n\)` are known/fixed - type of model and its assumptions are known - type of model component is assumed to be known (fixed, random) --- ## Possible linear regression models .content-box-yellow[.large[ **Purpose of model selection:** choose one or more models `\(\alpha \in \mathcal{A}\)` with specified desirable properties ]] - `\(\mathcal{A}\)` denotes a set of possible models (choices of variables) - `\(\mathcal{A}\)` is either appropriately reduced or the .red[power set] ### Power set when `\(p=3\)` - There are `\(M = \#\mathcal{A} = 2^3 = 8\)` distinct subsets of `\(\{1,2,3\}\)` - `\(p_{\alpha} = 1\)`: `y~1` - `\(p_{\alpha} = 2\)`: `y~x1`, `y~x2`, `y~x3` - `\(p_{\alpha} = 3\)`: `y~x1+x2`, `y~x1+x3`, `y~x2+x3`, and - `\(p_{\alpha} = 4\)`: `y~x1+x2+x3` --- class: eg ## Body fat data: Full model and power set ```r names(bfat) ``` ``` ## [1] "Bodyfat" "Age" "Weight" "Height" "Neck" "Chest" ## [7] "Abdo" "Hip" "Thigh" "Knee" "Ankle" "Bic" ## [13] "Fore" "Wrist" ``` .bold[.red[Full model]] `$$\begin{align*} \text{Bodyfat} = & \beta_1 + \beta_2\text{Age} + \beta_3\text{Weight}+ \beta_4\text{Height} + \beta_5\text{Height} + {} \\ & \beta_6\text{Chest} + \beta_7\text{Abdo} + \beta_8\text{Hip} + \beta_9\text{Thigh} + \beta_{10}\text{Knee} + {} \\ & \beta_{11}\text{Ankle} + \beta_{12}\text{Bic} + \beta_{13}\text{Fore} + \beta_{14}\text{Wrist} + \epsilon \end{align*}$$` .bold[.red[Power set]] Here, the intercept is not subject to selection. Therefore, `\(p-1 = 13\)` and thus the number of possible regression models is: `$$M = \#\mathcal{A} = 2^{13} = 8192$$` --- ## True model, correct models and wrong models - Let `\(\alpha_0\)` denote the .red[true model], that model which actually generated the data (this is completely hypothetical) - Model selection procedures are intended to identify useful models whether or not a true model exists - A .red[correct model] is any model satisfying `\(\alpha \supseteq \alpha_0\)` and elements in the complement of the correct models are .red[wrong models] --- class: eg code90 ## Body fat: Best bivariate model ```r M0 = lm(Bodyfat ~ Abdo, data = bfat) summary(M0) ``` ``` ## ## Call: ## lm(formula = Bodyfat ~ Abdo, data = bfat) ## ## Residuals: ## Min 1Q Median 3Q Max ## -10.3542 -2.9928 0.2191 2.4967 10.0106 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) -43.19058 3.63431 -11.88 <2e-16 *** ## Abdo 0.67411 0.03907 17.26 <2e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 4.249 on 126 degrees of freedom ## Multiple R-squared: 0.7027, Adjusted R-squared: 0.7003 ## F-statistic: 297.8 on 1 and 126 DF, p-value: < 2.2e-16 ``` --- class: eg ## Body fat: Best bivariate model ```r par(mfrow = c(2, 2)) plot(M0, which = c(1, 2, 3, 4)) ``` <!-- --> --- class: eg ## Body fat: Best bivariate model ```r library("ggfortify") autoplot(M0, which = 1:4) + theme_bw(base_size = 18) ``` <!-- --> --- class: eg code80 ## Body fat: Summary for the full model .scroll-output[ ```r M1 = lm(Bodyfat ~ ., data = bfat) summary(M1) ``` ``` ## ## Call: ## lm(formula = Bodyfat ~ ., data = bfat) ## ## Residuals: ## Min 1Q Median 3Q Max ## -9.3767 -2.5514 -0.1723 2.6391 9.1393 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) -52.553646 40.062856 -1.312 0.1922 ## Age 0.009288 0.043470 0.214 0.8312 ## Weight -0.271016 0.243569 -1.113 0.2682 ## Height 0.258388 0.320810 0.805 0.4223 ## Neck -0.592669 0.322125 -1.840 0.0684 . ## Chest 0.090883 0.164738 0.552 0.5822 ## Abdo 0.995184 0.123072 8.086 7.29e-13 *** ## Hip -0.141981 0.204533 -0.694 0.4890 ## Thigh 0.101272 0.200714 0.505 0.6148 ## Knee -0.096682 0.325889 -0.297 0.7673 ## Ankle -0.048017 0.507695 -0.095 0.9248 ## Bic 0.075332 0.244105 0.309 0.7582 ## Fore 0.412107 0.272144 1.514 0.1327 ## Wrist -0.263067 0.745145 -0.353 0.7247 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 4.081 on 114 degrees of freedom ## Multiple R-squared: 0.7519, Adjusted R-squared: 0.7236 ## F-statistic: 26.57 on 13 and 114 DF, p-value: < 2.2e-16 ``` ] --- class: eg ## Body fat: Diagnostics for the full model ```r par(mfrow = c(2, 2)) plot(M1, which = c(1, 2, 3, 4), add.smooth = FALSE) ``` <!-- --> --- class: eg ## Body fat: Diagnostics for the full model ```r autoplot(M1, which = 1:4) + theme_bw(base_size = 18) ``` <!-- --> --- class: eg ## Body fat: Model selection .content-box-yellow[.large[ **Aim:** to pick the best (or at least a good) model somewhere between the null model and the full model ]] - This example will be investigated in Lab 1 --- class: segue # "Classical" model selection criteria --- ## New concepts - Adjusted `\(R^2\)` - Akaike's information criterion (AIC) - Bayesian information criterion (BIC) - Selected R functions: `poly`, `logLik`, `I`, `which.min`, `AIC(...,k=log(n))`, - Complexity against AIC plot - Polynomial regression - Stepwise procedures --- class: eg ## Child lung function data .pull-left[ - FEV (forced expiratory volume) is a measure of lung function - The data include determinations of FEV on 318 female children who were seen in a childhood respiratory disease study in Massachusetts, USA - Child's age (in years) also recorded - Of interest to model FEV by age ] .pull-right[ <!-- <img src=fev.jpg width=400 /> --> <img src="imgs/fev.jpg" width="1097" /> ] .footnote[Source: Tager, Weiss, Rosner, and Speizer (1979)] --- class: eg ## Lung data: Scatterplot ```r data(fev, package = "mplot") # to obtain access to fev data set fem.fev = subset(fev, subset = sex == 0) plot(fev ~ age, data = fem.fev, pch = 19, col = gray(0.3, alpha = 0.5)) ``` <!-- --> - Relationship between FEV and age is .red[not linear] - Will try to model FEV as a .red[polynomial] in age --- ## `\(R^2\)` and `\(R^2_a\)` - The `\(\color{red}{R^{2}}\)` in a linear model `\(\alpha\)` is the proportion of variation in the dependent variable explained by the model: `$$\color{red}{R^2(\alpha)} = 1 - \frac{\text{Sum of squared residuals}}{\text{Total sum of squares}} = 1- \frac{\sum_i (y_i - \hat{y}_i)^2}{\sum_i (y_i - \bar{y}_i)^2}$$` - As the number of variables increases, the `\(\color{red}{R^{2}}\)` will .red[never decrease] - To compare between models of different sizes, one approach is to consider the .blue[adjusted] `\(\color{blue}{R^2}\)` which penalises for the size, `\(p_{\alpha}\)` of the model `\(\alpha\)`: `$$\color{blue}{R^2_a(\alpha)} = 1- \frac{\text{RSS}/\color{blue}{(n-p_{\alpha})}}{\sum_i (y_i - \bar{y}_i)^2/\color{blue}{(n-1)}}$$` --- class: eg ## Lung data: `\(R^2\)` values .pull-left-2[ ```r y = fem.fev$fev x = fem.fev$age length(unique(x)) ``` ``` ## [1] 17 ``` A total of 17 disjoint x values. ```r r2 = ar2 = NULL for (d in 1:16) { fit = lm(y ~ poly(x, degree = d)) r2[d] = summary(fit)$r.squared ar2[d] = summary(fit)$adj.r.squared } ``` ] .pull-right-1[ ```r res = cbind(r2, ar2) round(res, 3) ``` ``` ## r2 ar2 ## [1,] 0.546 0.545 ## [2,] 0.621 0.618 ## [3,] 0.622 0.618 ## [4,] 0.640 0.636 ## [5,] 0.641 0.635 ## [6,] 0.642 0.635 ## [7,] 0.642 0.634 ## [8,] 0.642 0.633 ## [9,] 0.644 0.634 ## [10,] 0.646 0.634 ## [11,] 0.648 0.636 ## [12,] 0.649 0.635 ## [13,] 0.649 0.634 ## [14,] 0.650 0.634 ## [15,] 0.650 0.633 ## [16,] 0.650 0.632 ``` ] --- class: eg ## Lung data: `\(R^2\)` plot ```r plot(1:16, r2, type = "l", xlab = "Degree of the polynomial (d)", ylab = expression(R^2 ~ value), lwd = 2) abline(h = max(r2), lty = 2, col = "blue") abline(v = which.max(r2), lty = 2, col = "blue") ``` <!-- --> --- class: eg ## Lung data: Adjusted `\(R^2\)` plot ```r plot(1:16, ar2, type = "l", xlab = "Degree of the polynomial (d)", ylab = expression(Adjusted ~ R^2 ~ value), lwd = 2) abline(h = max(ar2), lty = 2, col = "blue") abline(v = which.max(ar2), lty = 2, col = "blue") ``` <!-- --> --- class: eg ## Lung data: Degree 11 polynomial model .pull-left-2[.small[ ```r fit = lm(y ~ x + I(x^2) + I(x^3) + I(x^4) + I(x^5) + I(x^6) + I(x^7) +I(x^8) + I(x^9) + I(x^10) +I(x^11)) round(summary(fit)$coef, 3) ``` ``` ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) -480.609 390.589 -1.230 0.219 ## x 689.880 550.204 1.254 0.211 ## I(x^2) -430.833 337.479 -1.277 0.203 ## I(x^3) 155.234 119.265 1.302 0.194 ## I(x^4) -35.936 27.048 -1.329 0.185 ## I(x^5) 5.625 4.143 1.357 0.176 ## I(x^6) -0.609 0.438 -1.388 0.166 ## I(x^7) 0.046 0.032 1.420 0.157 ## I(x^8) -0.002 0.002 -1.452 0.147 ## I(x^9) 0.000 0.000 1.485 0.138 ## I(x^10) 0.000 0.000 -1.518 0.130 ## I(x^11) 0.000 0.000 1.551 0.122 ``` ```r summary(fit)$r.square ``` ``` ## [1] 0.6484668 ``` ```r summary(fit)$adj.r.square ``` ``` ## [1] 0.6358299 ``` ]] .pull-right-1[.small[ ```r library(dplyr) broom::glance(fit) %>% round(2) %>% t() %>% knitr::kable(format = "html") ``` <table> <tbody> <tr> <td style="text-align:left;"> r.squared </td> <td style="text-align:right;"> 0.65 </td> </tr> <tr> <td style="text-align:left;"> adj.r.squared </td> <td style="text-align:right;"> 0.64 </td> </tr> <tr> <td style="text-align:left;"> sigma </td> <td style="text-align:right;"> 0.39 </td> </tr> <tr> <td style="text-align:left;"> statistic </td> <td style="text-align:right;"> 51.32 </td> </tr> <tr> <td style="text-align:left;"> p.value </td> <td style="text-align:right;"> 0.00 </td> </tr> <tr> <td style="text-align:left;"> df </td> <td style="text-align:right;"> 12.00 </td> </tr> <tr> <td style="text-align:left;"> logLik </td> <td style="text-align:right;"> -145.41 </td> </tr> <tr> <td style="text-align:left;"> AIC </td> <td style="text-align:right;"> 316.83 </td> </tr> <tr> <td style="text-align:left;"> BIC </td> <td style="text-align:right;"> 365.73 </td> </tr> <tr> <td style="text-align:left;"> deviance </td> <td style="text-align:right;"> 46.47 </td> </tr> <tr> <td style="text-align:left;"> df.residual </td> <td style="text-align:right;"> 306.00 </td> </tr> </tbody> </table> ]] --- class: eg ## Lung data: Visualising the fitted model ```r plot(fev ~ age, data = fem.fev, pch = 19, col = gray(0.3, alpha = 0.5)) newdat = seq(0, 20, 0.01) predat = predict(fit, data.frame(x = newdat)) lines(predat ~ newdat, lty = 1, lwd = 2, col = "blue") ``` <!-- --> --- ## Selection criteria - Consider .bold[model selection methods] which choose models by minimizing an expression that can be written as `$$\text{Loss + Penalty}$$` - Many possible choices for both of these terms --- ## The loss **Classical choices:** - Minus twice the log-likelihood, `\(-2 \times \text{LogLik}(\alpha)\)` - In the normal case, this is equivalent to using RSS - This loss function is often applied when `\(\sigma^2\)` is unknown by estimating `\(\sigma^2\)` from a fixed, .red[baseline model] `\(\alpha_f\)` - The fixed model typically has large column rank `\(p_{\alpha_f}\)` and is often chosen to be the full model `\(p_{\alpha_f} = p\)` which contains all `\(p\)` columns of `\(\mathbf{X}\)` -- **Other choices include:** log-quasilikelihoods; least squares; `\(L_1\)` and other loss functions optimized in parameter estimation. Robust versions of these functions, e.g. Ronchetti and Staudte (1994), Konishi and Kitagawa (1996), Müller and Welsh (2005) and Müller and Welsh (2009) --- ## The penalty ### Simplest choices <!-- Note the span is left in the nested list below, because there seem to be issues with the dotclass notation in nested lists. --> - Simple penalties are of the form `\(\color{blue}{\lambda_n} \color{red}{f_n(p_{\alpha})}\)` - `\(\color{blue}{\lambda_n}\)` is a <span class="blue">penalty multiplier</span> (known, non-stochastic sequence) - `\(\color{red}{f_n}\)` is a <span class="red">penalty function</span> (known, non-stochastic sequence of `\(p_{\alpha}\)`) - Simplest choice for the penalty function is `\(f_n(p)=p + \text{constant}\)` - .bold[.blue[AIC]] penalty multiplier `\(\lambda_n=\color{blue}{2}\)` (Akaike, 1973; Mallows, 1973) - .blue[stepwise test] penalty multiplier `\(\lambda_n \approx \color{blue}{4}\)` - more generally, `\(\lambda_n \equiv \lambda\)` (Shibata, 1984) --- ## The penalty ### Simple choices - The penalty multiplier can depend on `\(p_{\alpha_f}\)` - e.g. Risk Inflation Criterion (RIC) with `\(\lambda_n=2\log(p_{\alpha_f})\)` (Foster and George, 1994) - The penalty multiplier can depend on `\(n\)` - `\(\lambda_n = c \log\log(n)\)` (Hannan and Quinn, 1979), - `\(\lambda_n/n \rightarrow 0\)` and `\(\lambda_n/\log\log(n) \rightarrow \infty\)` as `\(n\rightarrow \infty\)` (Zhao, Krishnaiah, and Bai, 1986; Rao and Wu, 1989); - and the well-known <span class = "bold blue">BIC</span> with `\(\lambda_n = \color{blue}{\log(n)}\)` (Schwarz, 1978) - There are a large number of other choices of `\(f_n(p)\)`, e.g. `$$f_n(p) = \frac{n(p+1)}{(n-p-2)}$$` used with `\(\lambda_n=2\)` by Sugiura (1978) and Hurvich and Tsai (1989) --- ## Other penalties We can penalize the parameters themselves using `\(f_n(\beta_{\alpha})\)`, e.g. the .red[ridge penalty], `$$f_n(\beta) = \sum_{j=1}^{p}\beta_j^2$$` or the .red[lasso penalty], `$$f_n(\beta) = \sum_{j=1}^{p}|\beta_j|.$$` Various other penalties, which are generally related to the choice of "Loss" and so tend to be used in rather specific criteria, e.g. - Takeuchi Information Criterion (Takeuchi, 1976) - robust `\(C_p\)` penalty (Ronchetti and Staudte, 1994) --- ## Optimality comes in many ways - .bold[.red[AIC]] penalty `\(2p_{\alpha}\)` .bold[.red[minimizes the Kullback-Leibler distance between the model and the true density]] (Akaike, 1973) - .bold[.blue[BIC]] penalty `\(\log(n)p_{\alpha}\)` .bold[.blue[optimizes the posterior probability]] of the model --- ## Consistency .content-box-yellow[.large[ **Desirable property:** consistent selection of the true model `\(\alpha_0\)`, the model which actually generated the data. ]] - Usually `\(\alpha_0 \subseteq \alpha_f = \{1,\ldots,p_{\alpha_f}\}\)` - If `\(p_{\alpha_0}\)` and `\(p_{\alpha_f}\)` are fixed, we get consistent model selection when `\(\lambda_n \rightarrow \infty\)` such that `\(\lambda_n/n \rightarrow 0\)` as `\(n \rightarrow \infty\)` and we do not when `\(\lambda_n \rightarrow c\)` for some constant `\(c\)` - On the other hand, if `\(p_{\alpha_0} \rightarrow \infty\)` then the results are reversed - .bold[.red[The optimal choice of penalty depends on the true model]] - Yang (2005): <span class="red">Adaptive compromise</span> between AIC and BIC penalty - simultaneously consistency and minimax optimality - <span class="red">is not possible</span> - Asymptotic theory well presented in Shao (1997) --- ## The Akaike information criterion - AIC is the most widely known and used model selection method (of course this does not imply it is the best/recommended method to use) - The AIC was introduced by Hirotugu Akaike in his seminal 1973 paper and is often defined as `$$\color{red}{\text{AIC}}(\color{blue}{\alpha}) = -2\times \text{LogLik}(\color{blue}{\alpha}) + \color{red}{2} \times p_{\color{blue}{\alpha}}$$` - The smaller the AIC the better the model - When computing the criterion value across different distributions, no constants must be discarded from the goodness-of-fit term - Models that differ by less than one or two AIC values can be regarded as somewhat equally well fitting --- ## Relationship to resampling procedures - .orange[Cross-validation] and the .orange[bootstrap] - to measure the uncertainty of estimated best models obtained from other model selection procedures or .red[model selection procedures in their own right] - Shao (1993, 1996, 1997), Müller and Welsh (2005, 2009) and others: - leave-one-out CV or `\(n\)` -out-of- `\(n\)` bootstrap are approx as AIC - `\(m\)` -out-of- `\(n\)` versions with `\(m/n \rightarrow 0\)` ( `\(m,n \rightarrow \infty\)` ) are approx as BIC - Additionally, .red[stratified resampling] to control the proportion of outliers in the bootstrap samples and ensure that these samples mimic the original data better --- ## Bayesian information criterion (BIC) - The BIC, introduced by Schwarz (1978), is given by `$$\color{red}{\text{BIC}}(\color{blue}{\alpha}) = -2 \times \text{LogLik}(\color{blue}{\alpha}) + \color{red}{\log(n)}\times p_{\color{blue}{\alpha}}$$` - It was derived to serve as an asymptotic approximation to a transformation of the Bayesian posterior probability of a candidate model - The BIC is also known as Schwarz information criterion - In large-sample settings, the fitted model favoured by .red[BIC] ideally corresponds to the candidate model which .red[optimises the posterior probability] - the model that is the most plausible given the observed data - Computation of BIC is based on the empirical log-likelihood and .red[does not require the specification of priors] - The .red[smaller] the BIC the .red[better] the model --- ## AIC vs BIC - .red[AIC] provides an asymptotically unbiased estimator of the expected .red[Kullback discrepancy] between the generating model and the fitted approximating model - .red[BIC] provides a large-sample estimator of a transformation of the Bayesian .red[posterior probability] associated with the approximating model - The penalty term of BIC is more stringent than the penalty term of AIC: for `\(n \geq 8\)`, `\(\log n\)` exceeds `\(2\)` <!-- --> - Consequently, BIC tends to favour smaller models than AIC --- class: eg ## Lung data: AIC values .pull-left-2[ ```r y = fem.fev$fev x = fem.fev$age length(unique(x)) ``` ``` ## [1] 17 ``` A total of 17 disjoint x values. Recall, - `\(\color{red}{\text{AIC}}(\color{blue}{\alpha}) = -2\times \text{LogLik}(\color{blue}{\alpha}) + \color{red}{2} \times p_{\color{blue}{\alpha}}\)` - `\(\color{red}{\text{BIC}}(\color{blue}{\alpha}) = -2 \times \text{LogLik}(\color{blue}{\alpha}) + \color{red}{\log(n)}\times p_{\color{blue}{\alpha}}\)` .smaller[ ```r aic = bic = NULL n = length(y) for (d in 1:16){ fit = lm(y ~ poly(x, degree = d)) # AIC(fit) aic[d] = -2*logLik(fit) + 2*(d+2) # AIC(fit, k = log(n)) bic[d] = -2*logLik(fit) + log(n)*(d+2) } ``` ]] .pull-right-1[ ```r round(cbind(aic, bic), 1) ``` ``` ## aic bic ## [1,] 378.1 389.4 ## [2,] 323.0 338.0 ## [3,] 324.2 343.0 ## [4,] 310.1 332.7 ## [5,] 311.4 337.7 ## [6,] 312.8 342.9 ## [7,] 314.7 348.6 ## [8,] 316.7 354.3 ## [9,] 316.8 358.2 ## [10,] 317.3 362.5 ## [11,] 316.8 365.7 ## [12,] 318.8 371.4 ## [13,] 320.7 377.1 ## [14,] 321.7 381.9 ## [15,] 323.3 387.3 ## [16,] 325.3 393.0 ``` ] --- class: eg ## Lung data: AIC plot ```r plot(1:16, aic, type = "l", xlab = "Degree of the polynomial (d)", ylab = "AIC value") abline(h = min(aic), lty = 2, col = "blue") abline(v = which.min(aic), lty = 2, col = "blue") ``` <!-- --> --- class: eg ## Lung data: BIC plot ```r plot(1:16, bic, type = "l", xlab = "Degree of the polynomial (d)", ylab = "BIC value") abline(h = min(bic), lty = 2, col = "blue") abline(v = which.min(bic), lty = 2, col = "blue") ``` <!-- --> --- class: eg ## Lung data: Degree 4 polynomial model ```r which.min(aic) ``` ``` ## [1] 4 ``` ```r fit = lm(y ~ x + I(x^2) + I(x^3) + I(x^4)) # summary(fit) round(summary(fit)$coef, 4) ``` ``` ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 3.5686 1.0434 3.4200 0.0007 ## x -1.3941 0.4529 -3.0782 0.0023 ## I(x^2) 0.2713 0.0692 3.9183 0.0001 ## I(x^3) -0.0182 0.0044 -4.0956 0.0001 ## I(x^4) 0.0004 0.0001 4.0285 0.0001 ``` ```r round(broom::glance(fit), 3) ``` ``` ## # A tibble: 1 x 11 ## r.squared adj.r.squared sigma statistic p.value df ## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> ## 1 0.64 0.636 0.39 139. 0 5 ## # … with 5 more variables: logLik <dbl>, AIC <dbl>, ## # BIC <dbl>, deviance <dbl>, df.residual <dbl> ``` --- class: eg ## Lung data: Notes on the fitted model ```r plot(fev ~ age, data = fem.fev, pch = 19, col = gray(0.3, alpha = 0.5)) curve(3.57 - 1.39 * x + 0.27 * x^2 - 0.018 * x^3 + 4e-04 * x^4, 0, 20, add = TRUE, lwd = 2) ``` <!-- --> - The fitted model looks adequate for ages in the range 5 to 15 years - At the extreme ends of the plot the fitted curve exhibits spurious upward curvature - Form of the fitted polynomial is an artefact rather than a characteristic of the data --- class: segue # Stepwise search schemes --- ## Automated stepwise variable selection algorithms... `\(\ldots\)` or bushwalking in `\(\mathcal{A}\)` ### "Walk along" the following "path": - Choose a model to start with, e.g. - the model with no covariates (<span class = "red bold">null model</span>) - or the model with all covariates included (<span class = "red bold">full model</span>) - .bold[.red[Test]] to see if there is an advantage in adding or removing covariates - .bold[.red[Repeat]] adding/removing variables until there is no advantage in changing the model - Such a strategy typically requires to visit at most a quadratic order of number of models! - Works for very large `\(p\)` as long as `\(p < n\)` and can work in special scenarios when `\(p>n\)` if starting with the null model --- class: eg background-image: url("imgs/burglar.jpg") background-position: 100% 100% background-size: 50% 100% ## US crime rate data .pull-left[ - This example concerns famous crime data from 47 states of the USA in 1960 - Our aim is to model the crime rate as a function of up to 15 potential explanatory variables - We will consider stepwise search schemes ] .pull-right[ ] <!-- <img src=burglar.jpg width=400 /> --> <!-- <img src="imgs/burglar.jpg" width="480" /> --> .footnote[Source: Ehrlich (1973)] --- class: eg ## Crime data: Variables in the data set .font75[ Variable | Description ------ | ------ M | percentage of males aged 14-24 So | indicator variable for a southern state Ed | mean years of schooling Po1 | police expenditure in 1960 Po2 | police expenditure in 1959 LF | labour force participation rate M.F | number of males per 1000 females Pop | state population NW | number of nonwhites per 1000 people U1 | unemployment rate of urban males 14-24 U2 | unemployment rate of urban males 35-39 GDP | gross domestic product per head Ineq | income inequality Prob | probability of imprisonment Time | average time served in state prisons y | rate of crimes in a particular category per head of population ] --- class: eg ## Crime data ```r data("UScrime", package = "MASS") dim(UScrime) ``` ``` ## [1] 47 16 ``` ```r n = 47 k = 15 2^k ``` ``` ## [1] 32768 ``` ```r # pairs(UScrime) ``` --- class: eg code80 ## Crime data: Null and full model .pull-left-2[ ```r M0 = lm(y ~ 1, data = UScrime) # Null model M1 = lm(y ~ ., data = UScrime) # Full model round(summary(M1)$coef, 3) ``` ``` ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) -5984.288 1628.318 -3.675 0.001 ## M 8.783 4.171 2.106 0.043 ## So -3.803 148.755 -0.026 0.980 ## Ed 18.832 6.209 3.033 0.005 ## Po1 19.280 10.611 1.817 0.079 ## Po2 -10.942 11.748 -0.931 0.359 ## LF -0.664 1.470 -0.452 0.655 ## M.F 1.741 2.035 0.855 0.399 ## Pop -0.733 1.290 -0.568 0.574 ## NW 0.420 0.648 0.649 0.521 ## U1 -5.827 4.210 -1.384 0.176 ## U2 16.780 8.234 2.038 0.050 ## GDP 0.962 1.037 0.928 0.361 ## Ineq 7.067 2.272 3.111 0.004 ## Prob -4855.266 2272.375 -2.137 0.041 ## Time -3.479 7.165 -0.486 0.631 ``` ] .pull-right-1[ .pull-down[ ```r t(round(broom::glance(M1), 2)) ``` ``` ## [,1] ## r.squared 0.80 ## adj.r.squared 0.71 ## sigma 209.06 ## statistic 8.43 ## p.value 0.00 ## df 16.00 ## logLik -308.01 ## AIC 650.03 ## BIC 681.48 ## deviance 1354945.77 ## df.residual 31.00 ``` ] ] --- ## The `drop1` and `update` command in R - For a response variable `Y` and explanatory variables `x1`, `...`, `xk` stored in the data frame `dat` consider - `M1 = lm(Y ~ ., data = dat)` - The function `drop1(M1, test = "F")` returns a number of information criteria for all explanatory variables used in `M1` - In particular, this includes the p-values of the F-test for `\(H_0: \beta_j = 0\)` versus `\(H_1: \beta_j \neq 0\)` for all `\(j = 1,\ldots,k\)` - To efficiently delete a variable from regression model `M1`, say `x1`, the `update()` function can be used: - `M2 = update(M1, . ~ . - x1)` - The full stops in the `update()` formula `.~.` stand for "whatever was in the corresponding position in the old formula" - The general syntax for updating models is: - `update(old.model, new.formula,...)` --- class: eg ## Crime data: `drop1` and `update` .pull-left[ Start with the full model, `M1` .code60[ ```r drop1(M1, test = "F") ``` ``` ## Single term deletions ## ## Model: ## y ~ M + So + Ed + Po1 + Po2 + LF + M.F + Pop + NW + U1 + U2 + ## GDP + Ineq + Prob + Time ## Df Sum of Sq RSS AIC F value Pr(>F) ## <none> 1354946 515 ## M 1 193770 1548716 519 4.43 0.0434 * ## So 1 29 1354974 513 0.00 0.9798 ## Ed 1 402117 1757063 525 9.20 0.0049 ** ## Po1 1 144306 1499252 517 3.30 0.0789 . ## Po2 1 37919 1392865 514 0.87 0.3588 ## LF 1 8917 1363862 513 0.20 0.6547 ## M.F 1 31967 1386913 514 0.73 0.3990 ## Pop 1 14122 1369068 513 0.32 0.5738 ## NW 1 18395 1373341 513 0.42 0.5213 ## U1 1 83722 1438668 515 1.92 0.1762 ## U2 1 181536 1536482 519 4.15 0.0502 . ## GDP 1 37613 1392558 514 0.86 0.3608 ## Ineq 1 423031 1777977 525 9.68 0.0040 ** ## Prob 1 199538 1554484 519 4.57 0.0406 * ## Time 1 10304 1365250 513 0.24 0.6307 ## --- ## Signif. codes: ## 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` ] Remove `So` and create `M2` ] .pull-right[ .code60[ ```r M2 = update(M1, . ~ . - So) drop1(M2, test = "F") ``` ``` ## Single term deletions ## ## Model: ## y ~ M + Ed + Po1 + Po2 + LF + M.F + Pop + NW + U1 + U2 + GDP + ## Ineq + Prob + Time ## Df Sum of Sq RSS AIC F value Pr(>F) ## <none> 1354974 513 ## M 1 195084 1550059 517 4.61 0.0395 * ## Ed 1 403140 1758114 523 9.52 0.0042 ** ## Po1 1 144302 1499277 515 3.41 0.0742 . ## Po2 1 37954 1392929 512 0.90 0.3509 ## LF 1 10878 1365852 511 0.26 0.6157 ## M.F 1 32449 1387423 512 0.77 0.3879 ## Pop 1 14127 1369101 511 0.33 0.5676 ## NW 1 21626 1376600 511 0.51 0.4800 ## U1 1 96420 1451395 514 2.28 0.1411 ## U2 1 189859 1544834 517 4.48 0.0421 * ## GDP 1 39223 1394197 512 0.93 0.3430 ## Ineq 1 488834 1843808 525 11.54 0.0018 ** ## Prob 1 204463 1559437 517 4.83 0.0353 * ## Time 1 10341 1365315 511 0.24 0.6246 ## --- ## Signif. codes: ## 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` ] Remove `Time` and create `M3` ```r M3 = update(M2,.~.-Time) ``` ] --- class: eg ## Crime data: `drop1` and `update` .pull-left[.code60[ ```r drop1(M3, test = "F") ``` ``` ## Single term deletions ## ## Model: ## y ~ M + Ed + Po1 + Po2 + LF + M.F + Pop + NW + U1 + U2 + GDP + ## Ineq + Prob ## Df Sum of Sq RSS AIC F value Pr(>F) ## <none> 1365315 511 ## M 1 186110 1551425 515 4.50 0.0415 * ## Ed 1 409448 1774763 521 9.90 0.0035 ** ## Po1 1 134137 1499452 513 3.24 0.0809 . ## Po2 1 28932 1394247 510 0.70 0.4090 ## LF 1 10533 1375848 509 0.25 0.6172 ## M.F 1 41784 1407099 510 1.01 0.3222 ## Pop 1 21846 1387161 510 0.53 0.4726 ## NW 1 15482 1380797 510 0.37 0.5449 ## U1 1 91420 1456735 512 2.21 0.1466 ## U2 1 184143 1549458 515 4.45 0.0426 * ## GDP 1 36070 1401385 510 0.87 0.3572 ## Ineq 1 502909 1868224 524 12.16 0.0014 ** ## Prob 1 237493 1602808 517 5.74 0.0224 * ## --- ## Signif. codes: ## 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` ] ```r M4 = update(M3, . ~ . - LF) ``` ] .pull-right[.code60[ ```r drop1(M4, test = "F") ``` ``` ## Single term deletions ## ## Model: ## y ~ M + Ed + Po1 + Po2 + M.F + Pop + NW + U1 + U2 + GDP + Ineq + ## Prob ## Df Sum of Sq RSS AIC F value Pr(>F) ## <none> 1375848 509 ## M 1 217716 1593564 514 5.38 0.0265 * ## Ed 1 413254 1789103 520 10.21 0.0030 ** ## Po1 1 123896 1499744 511 3.06 0.0892 . ## Po2 1 21418 1397266 508 0.53 0.4719 ## M.F 1 31252 1407100 508 0.77 0.3857 ## Pop 1 27803 1403651 508 0.69 0.4129 ## NW 1 11675 1387523 508 0.29 0.5947 ## U1 1 80954 1456802 510 2.00 0.1663 ## U2 1 190746 1566594 513 4.71 0.0370 * ## GDP 1 35035 1410883 509 0.87 0.3587 ## Ineq 1 500944 1876792 522 12.38 0.0013 ** ## Prob 1 226971 1602819 515 5.61 0.0237 * ## --- ## Signif. codes: ## 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` ] ```r M5 = update(M4, . ~ . - NW) drop1(M5, test = "F") ``` and so on... ] --- ## Backward variable selection 1. Start with model containing .red[all] possible explanatory variables 2. For each variable in turn, investigate effect of removing variable from current model 3. .red[Remove the least informative variable], unless this variable is nonetheless supplying significant information about the response 4. Go to step 2. Stop only if all variables in the current model are important ### Comments - Implementation depends on how we assess the importance of variables - .red[Possible criteria/tests]: use information criteria (AIC, BIC, Mallows Cp etc), goodness of fit criteria such as the adjusted `\(R^2\)`, or resampling based criteria - either using CV or the Bootstrap, as we will see later in the course, or use p-values (e.g. from `\(F\)`, `\(t\)` or `\(\chi^2\)` tests) --- class: eg ## Crime data: Backward search using BIC ```r step.back.bic = step(M1, scope = list(lower = M0, upper = M1), direction = "backward", trace = FALSE, # trace = TRUE will print all steps k = log(47)) # k = log(n) for BIC round(summary(step.back.bic)$coef,3) ``` ``` ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) -5040.505 899.843 -5.602 0.000 ## M 10.502 3.330 3.154 0.003 ## Ed 19.647 4.475 4.390 0.000 ## Po1 11.502 1.375 8.363 0.000 ## U2 8.937 4.091 2.185 0.035 ## Ineq 6.765 1.394 4.855 0.000 ## Prob -3801.836 1528.097 -2.488 0.017 ``` --- class: eg ## Crime data: Backward search using AIC ```r step.back.aic = step(M1, scope = list(lower = M0, upper = M1), direction = "backward", trace = FALSE, k = 2) # k = 2 for AIC round(summary(step.back.aic)$coef,3) ``` ``` ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) -6426.101 1194.611 -5.379 0.000 ## M 9.332 3.350 2.786 0.008 ## Ed 18.012 5.275 3.414 0.002 ## Po1 10.265 1.552 6.613 0.000 ## M.F 2.234 1.360 1.642 0.109 ## U1 -6.087 3.339 -1.823 0.076 ## U2 18.735 7.248 2.585 0.014 ## Ineq 6.133 1.396 4.394 0.000 ## Prob -3796.032 1490.646 -2.547 0.015 ``` --- class: eg ## Crime data: `drop1` We could try to see if we can drop any variables from the backward stepwise using BIC model: ```r drop1(step.back.bic, test = "F") ``` ``` ## Single term deletions ## ## Model: ## y ~ M + Ed + Po1 + U2 + Ineq + Prob ## Df Sum of Sq RSS AIC F value Pr(>F) ## <none> 1611057 505 ## M 1 400611 2011667 513 9.95 0.0031 ** ## Ed 1 776207 2387264 521 19.27 8.1e-05 *** ## Po1 1 2817067 4428124 550 69.94 2.6e-10 *** ## U2 1 192233 1803290 508 4.77 0.0348 * ## Ineq 1 949221 2560278 525 23.57 1.9e-05 *** ## Prob 1 249308 1860365 510 6.19 0.0171 * ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` --- class: eg ## Crime data: `drop1` Similarly, we could try to see if we can drop any variables from the backward stepwise using AIC model: ```r drop1(step.back.aic, test = "F") ``` ``` ## Single term deletions ## ## Model: ## y ~ M + Ed + Po1 + M.F + U1 + U2 + Ineq + Prob ## Df Sum of Sq RSS AIC F value Pr(>F) ## <none> 1453068 504 ## M 1 296790 1749858 511 7.76 0.0083 ** ## Ed 1 445788 1898855 515 11.66 0.0015 ** ## Po1 1 1672038 3125105 538 43.73 8.3e-08 *** ## M.F 1 103159 1556227 505 2.70 0.1087 ## U1 1 127044 1580112 506 3.32 0.0762 . ## U2 1 255443 1708511 510 6.68 0.0137 * ## Ineq 1 738244 2191312 521 19.31 8.6e-05 *** ## Prob 1 247978 1701046 509 6.49 0.0151 * ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` --- class: font70 columns-2 ## References <a name=bib-Akaike1973></a>[Akaike, H.](#cite-Akaike1973) (1973). "Information theory and and extension of the maximum likelihood principle". In: _Second International Symposium on Information Theory_. Ed. by B. Petrov and F. Csaki. Budapest: Academiai Kiado, pp. 267-281. <a name=bib-Ehrlich1973></a>[Ehrlich, I.](#cite-Ehrlich1973) (1973). "Participation in Illegitimate Activities: A Theoretical and Empirical Investigation". In: _Journal of Political Economy_ 81.3, pp. 521-565. URL: [http://www.jstor.org/stable/1831025](http://www.jstor.org/stable/1831025). <a name=bib-Foster1994></a>[Foster, D. P. and E. I. George](#cite-Foster1994) (1994). "The Risk Inflation Criterion for Multiple Regression". In: _The Annals of Statistics_ 22.4, pp. 1947-1975. DOI: [10.1214/aos/1176325766](https://doi.org/10.1214%2Faos%2F1176325766). <a name=bib-Hannan1979></a>[Hannan, E. J. and B. G. Quinn](#cite-Hannan1979) (1979). "The Determination of the Order of an Autoregression". In: _Journal of the Royal Statistical Society. Series B (Methodological)_ 41.2, pp. 190-195. URL: [http://www.jstor.org/stable/2985032](http://www.jstor.org/stable/2985032). <a name=bib-Hurvich1989></a>[Hurvich, C. M. and C. Tsai](#cite-Hurvich1989) (1989). "Regression and time series model selection in small samples". In: _Biometrika_ 76.2, pp. 297-307. DOI: [10.1093/biomet/76.2.297](https://doi.org/10.1093%2Fbiomet%2F76.2.297). <a name=bib-Johnson1996></a>[Johnson, R. W.](#cite-Johnson1996) (1996). "Fitting Percentage of Body Fat to Simple Body Measurements". In: _Journal of Statistics Education_ 4.1. URL: [http://www.amstat.org/publications/jse/v4n1/datasets.johnson.html](http://www.amstat.org/publications/jse/v4n1/datasets.johnson.html). <a name=bib-Konishi1996></a>[Konishi, S. and G. Kitagawa](#cite-Konishi1996) (1996). "Generalised information criteria in model selection". In: _Biometrika_ 83.4, pp. 875-890. DOI: [10.1093/biomet/83.4.875](https://doi.org/10.1093%2Fbiomet%2F83.4.875). <a name=bib-Mallows1973></a>[Mallows, C. L.](#cite-Mallows1973) (1973). "Some Comments on Cp". In: _Technometrics_ 15.4, pp. 661-675. DOI: [10.2307/1267380](https://doi.org/10.2307%2F1267380). <a name=bib-Mueller2005></a>[Müller, S. and A. H. Welsh](#cite-Mueller2005) (2005). "Outlier robust model selection in linear regression". In: _Journal of the American Statistical Association_ 100.472, pp. 1297-1310. DOI: [10.1198/016214505000000529](https://doi.org/10.1198%2F016214505000000529). <a name=bib-Mueller2009></a>[Müller, S. and A. H. Welsh](#cite-Mueller2009) (2009). "Robust model selection in generalized linear models". In: _Statistica Sinica_ 19.3, pp. 1155-1170. <a name=bib-Rao1989></a>[Rao, R. and Y. Wu](#cite-Rao1989) (1989). "A strongly consistent procedure for model selection in a regression problem". In: _Biometrika_ 76.2, pp. 369-374. DOI: [10.1093/biomet/76.2.369](https://doi.org/10.1093%2Fbiomet%2F76.2.369). <a name=bib-Ronchetti1994></a>[Ronchetti, E. and R. G. Staudte](#cite-Ronchetti1994) (1994). "A Robust Version of Mallows's Cp". In: _Journal of the American Statistical Association_ 89.426, pp. 550-559. DOI: [10.1080/01621459.1994.10476780](https://doi.org/10.1080%2F01621459.1994.10476780). <a name=bib-Schwarz1978></a>[Schwarz, G.](#cite-Schwarz1978) (1978). "Estimating the Dimension of a Model". In: _The Annals of Statistics_ 6.2, pp. 461-464. DOI: [10.1214/aos/1176344136](https://doi.org/10.1214%2Faos%2F1176344136). <a name=bib-Shao1997></a>[Shao, J.](#cite-Shao1997) (1997). "An Asymptotic Theory for Linear Model Selection". In: _Statistica Sinica_ 7.2, pp. 221-264. <a name=bib-Shibata1984></a>[Shibata, R.](#cite-Shibata1984) (1984). "Approximate efficiency of a selection procedure for the number of regression variables". In: _Biometrika_ 71.1, pp. 43-49. DOI: [10.1093/biomet/71.1.43](https://doi.org/10.1093%2Fbiomet%2F71.1.43). <a name=bib-Sugiura1978></a>[Sugiura, N.](#cite-Sugiura1978) (1978). "Further analysts of the data by Akaike's information criterion and the finite corrections". In: _Communications in Statistics - Theory and Methods_ 7.1, pp. 13-26. DOI: [10.1080/03610927808827599](https://doi.org/10.1080%2F03610927808827599). <a name=bib-Tager1979></a>[Tager, I. B, S. T. Weiss, B. Rosner, et al.](#cite-Tager1979) (1979). "Effect of Parental Cigarette Smoking on the Pulmonary Function of Children". In: _American Journal of Epidemiology_ 110.1, pp. 15-26. <a name=bib-Takeuchi1976></a>[Takeuchi, K.](#cite-Takeuchi1976) (1976). "Distribution of informational statistics and a criterion of model fitting". In: _Suri-Kagaku [Mathematical Sciences]_ 153, pp. 12-18. <a name=bib-Yang2005></a>[Yang, Y.](#cite-Yang2005) (2005). "Can the strengths of AIC and BIC be shared? A conflict between model indentification and regression estimation". In: _Biometrika_ 92.4, pp. 937-950. DOI: [10.1093/biomet/92.4.937](https://doi.org/10.1093%2Fbiomet%2F92.4.937). <a name=bib-Zhao1986></a>[Zhao, L, P. Krishnaiah, and Z. Bai](#cite-Zhao1986) (1986). "On detection of the number of signals in presence of white noise". In: _Journal of Multivariate Analysis_ 20.1, pp. 1-25. DOI: [10.1016/0047-259X(86)90017-5](https://doi.org/10.1016%2F0047-259X%2886%2990017-5). --- class: code40 columns-2 ```r devtools::session_info(include_base = FALSE) ``` ``` ## ─ Session info ─────────────────────────────────────────────────────────────────────────────────── ## setting value ## version R version 3.5.2 (2018-12-20) ## os macOS Mojave 10.14.4 ## system x86_64, darwin15.6.0 ## ui X11 ## language (EN) ## collate en_AU.UTF-8 ## ctype en_AU.UTF-8 ## tz Australia/Sydney ## date 2019-05-13 ## ## ─ Packages ─────────────────────────────────────────────────────────────────────────────────────── ## package * version date lib source ## assertthat 0.2.1 2019-03-21 [1] CRAN (R 3.5.2) ## backports 1.1.4 2019-04-10 [1] CRAN (R 3.5.2) ## bibtex 0.4.2 2017-06-30 [1] CRAN (R 3.5.0) ## bitops 1.0-6 2013-08-17 [1] CRAN (R 3.5.0) ## broom 0.5.2 2019-04-07 [1] CRAN (R 3.5.2) ## Cairo 1.5-10 2019-03-28 [1] CRAN (R 3.5.2) ## callr 3.2.0 2019-03-15 [1] CRAN (R 3.5.2) ## caTools 1.17.1.2 2019-03-06 [1] CRAN (R 3.5.2) ## class 7.3-15 2019-01-01 [1] CRAN (R 3.5.2) ## cli 1.1.0 2019-03-19 [1] CRAN (R 3.5.2) ## cluster 2.0.8 2019-04-05 [1] CRAN (R 3.5.2) ## codetools 0.2-16 2018-12-24 [1] CRAN (R 3.5.2) ## colorspace 1.4-1 2019-03-18 [1] CRAN (R 3.5.2) ## crayon 1.3.4 2017-09-16 [1] CRAN (R 3.5.0) ## crosstalk 1.0.0 2016-12-21 [1] CRAN (R 3.5.0) ## data.table 1.12.2 2019-04-07 [1] CRAN (R 3.5.2) ## dendextend 1.10.0 2019-03-15 [1] CRAN (R 3.5.2) ## DEoptimR 1.0-8 2016-11-19 [1] CRAN (R 3.5.0) ## desc 1.2.0 2018-05-01 [1] CRAN (R 3.5.0) ## devtools 2.0.2 2019-04-08 [1] CRAN (R 3.5.2) ## digest 0.6.18 2018-10-10 [1] CRAN (R 3.5.0) ## diptest 0.75-7 2016-12-05 [1] CRAN (R 3.5.0) ## dplyr * 0.8.0.9014 2019-04-18 [1] Github (tidyverse/dplyr@792ca49) ## evaluate 0.13 2019-02-12 [1] CRAN (R 3.5.2) ## fansi 0.4.0 2018-10-05 [1] CRAN (R 3.5.0) ## flexmix 2.3-15 2019-02-18 [1] CRAN (R 3.5.2) ## foreach 1.4.4 2017-12-12 [1] CRAN (R 3.5.0) ## formatR 1.6 2019-03-05 [1] CRAN (R 3.5.2) ## fpc 2.1-11.1 2018-07-20 [1] CRAN (R 3.5.0) ## fs 1.2.7 2019-03-19 [1] CRAN (R 3.5.2) ## gclus 1.3.2 2019-01-07 [1] CRAN (R 3.5.2) ## gdata 2.18.0 2017-06-06 [1] CRAN (R 3.5.0) ## generics 0.0.2 2018-11-29 [1] CRAN (R 3.5.0) ## ggfortify * 0.4.6 2019-03-20 [1] CRAN (R 3.5.2) ## ggplot2 * 3.1.1 2019-04-07 [1] CRAN (R 3.5.2) ## glue 1.3.1 2019-03-12 [1] CRAN (R 3.5.2) ## gplots 3.0.1.1 2019-01-27 [1] CRAN (R 3.5.2) ## gridExtra 2.3 2017-09-09 [1] CRAN (R 3.5.0) ## gtable 0.3.0 2019-03-25 [1] CRAN (R 3.5.2) ## gtools 3.8.1 2018-06-26 [1] CRAN (R 3.5.0) ## heatmaply 0.15.2 2018-07-06 [1] CRAN (R 3.5.0) ## highr 0.8 2019-03-20 [1] CRAN (R 3.5.2) ## htmltools 0.3.6 2017-04-28 [1] CRAN (R 3.5.0) ## htmlwidgets 1.3 2018-09-30 [1] CRAN (R 3.5.0) ## httpuv 1.5.1 2019-04-05 [1] CRAN (R 3.5.2) ## httr 1.4.0 2018-12-11 [1] CRAN (R 3.5.0) ## iterators 1.0.10 2018-07-13 [1] CRAN (R 3.5.0) ## jpeg 0.1-8 2014-01-23 [1] CRAN (R 3.5.0) ## jsonlite 1.6 2018-12-07 [1] CRAN (R 3.5.0) ## kernlab 0.9-27 2018-08-10 [1] CRAN (R 3.5.0) ## KernSmooth 2.23-15 2015-06-29 [1] CRAN (R 3.5.2) ## knitcitations * 1.0.8 2017-07-04 [1] CRAN (R 3.5.0) ## knitr * 1.22 2019-03-08 [1] CRAN (R 3.5.2) ## labeling 0.3 2014-08-23 [1] CRAN (R 3.5.0) ## later 0.8.0 2019-02-11 [1] CRAN (R 3.5.2) ## lattice 0.20-38 2018-11-04 [1] CRAN (R 3.5.2) ## lazyeval 0.2.2 2019-03-15 [1] CRAN (R 3.5.2) ## lubridate 1.7.4 2018-04-11 [1] CRAN (R 3.5.0) ## magrittr 1.5 2014-11-22 [1] CRAN (R 3.5.0) ## MASS 7.3-51.4 2019-03-31 [1] CRAN (R 3.5.2) ## mclust 5.4.3 2019-03-14 [1] CRAN (R 3.5.2) ## memoise 1.1.0 2017-04-21 [1] CRAN (R 3.5.0) ## mime 0.6 2018-10-05 [1] CRAN (R 3.5.0) ## modeltools 0.2-22 2018-07-16 [1] CRAN (R 3.5.0) ## munsell 0.5.0 2018-06-12 [1] CRAN (R 3.5.0) ## mvtnorm 1.0-10 2019-03-05 [1] CRAN (R 3.5.2) ## nlme 3.1-139 2019-04-09 [1] CRAN (R 3.5.2) ## nnet 7.3-12 2016-02-02 [1] CRAN (R 3.5.2) ## pillar 1.3.1 2018-12-15 [1] CRAN (R 3.5.0) ## pkgbuild 1.0.3 2019-03-20 [1] CRAN (R 3.5.2) ## pkgconfig 2.0.2 2018-08-16 [1] CRAN (R 3.5.0) ## pkgload 1.0.2 2018-10-29 [1] CRAN (R 3.5.0) ## plotly 4.9.0 2019-04-10 [1] CRAN (R 3.5.2) ## plyr 1.8.4 2016-06-08 [1] CRAN (R 3.5.0) ## prabclus 2.2-7 2019-01-17 [1] CRAN (R 3.5.2) ## prettyunits 1.0.2 2015-07-13 [1] CRAN (R 3.5.0) ## processx 3.3.0 2019-03-10 [1] CRAN (R 3.5.2) ## promises 1.0.1 2018-04-13 [1] CRAN (R 3.5.0) ## ps 1.3.0 2018-12-21 [1] CRAN (R 3.5.0) ## purrr 0.3.2 2019-03-15 [1] CRAN (R 3.5.2) ## R6 2.4.0 2019-02-14 [1] CRAN (R 3.5.2) ## RColorBrewer 1.1-2 2014-12-07 [1] CRAN (R 3.5.0) ## Rcpp 1.0.1 2019-03-17 [1] CRAN (R 3.5.2) ## RefManageR 1.2.12 2019-04-03 [1] CRAN (R 3.5.2) ## registry 0.5-1 2019-03-05 [1] CRAN (R 3.5.2) ## remotes 2.0.4 2019-04-10 [1] CRAN (R 3.5.2) ## reshape2 1.4.3 2017-12-11 [1] CRAN (R 3.5.0) ## rlang 0.3.4 2019-04-07 [1] CRAN (R 3.5.2) ## rmarkdown 1.12 2019-03-14 [1] CRAN (R 3.5.2) ## robustbase 0.93-4 2019-03-19 [1] CRAN (R 3.5.2) ## rprojroot 1.3-2 2018-01-03 [1] CRAN (R 3.5.0) ## scales 1.0.0 2018-08-09 [1] CRAN (R 3.5.0) ## seriation 1.2-3 2018-02-05 [1] CRAN (R 3.5.0) ## sessioninfo 1.1.1 2018-11-05 [1] CRAN (R 3.5.0) ## shiny 1.3.2 2019-04-22 [1] CRAN (R 3.5.2) ## stringi 1.4.3 2019-03-12 [1] CRAN (R 3.5.2) ## stringr 1.4.0 2019-02-10 [1] CRAN (R 3.5.2) ## testthat 2.1.1 2019-04-23 [1] CRAN (R 3.5.2) ## tibble 2.1.1 2019-03-16 [1] CRAN (R 3.5.2) ## tidyr 0.8.3.9000 2019-04-10 [1] Github (tidyverse/tidyr@e92e74e) ## tidyselect 0.2.5 2018-10-11 [1] CRAN (R 3.5.0) ## trimcluster 0.1-2.1 2018-07-20 [1] CRAN (R 3.5.0) ## TSP 1.1-6 2018-04-30 [1] CRAN (R 3.5.0) ## usethis 1.5.0 2019-04-07 [1] CRAN (R 3.5.2) ## utf8 1.1.4 2018-05-24 [1] CRAN (R 3.5.0) ## vctrs 0.1.0.9002 2019-04-10 [1] Github (r-lib/vctrs@812a642) ## viridis 0.5.1 2018-03-29 [1] CRAN (R 3.5.0) ## viridisLite 0.3.0 2018-02-01 [1] CRAN (R 3.5.0) ## webshot 0.5.1 2018-09-28 [1] CRAN (R 3.5.0) ## whisker 0.3-2 2013-04-28 [1] CRAN (R 3.5.0) ## withr 2.1.2 2018-03-15 [1] CRAN (R 3.5.0) ## xaringan 0.9 2019-03-06 [1] CRAN (R 3.5.2) ## xfun 0.6 2019-04-02 [1] CRAN (R 3.5.2) ## xml2 1.2.0 2018-01-24 [1] CRAN (R 3.5.0) ## xtable 1.8-4 2019-04-21 [1] CRAN (R 3.5.2) ## yaml 2.2.0 2018-07-25 [1] CRAN (R 3.5.1) ## zeallot 0.1.0 2018-01-28 [1] CRAN (R 3.5.0) ## ## [1] /Library/Frameworks/R.framework/Versions/3.5/Resources/library ```